Understanding Reasoning Models: AI Systems Designed to Think

Understand what sets reasoning models apart and how they enable AI to analyze, plan, and solve tasks more reliably.

Back in September 2024, which feels like ages ago now, OpenAI dropped their o1 models on us. These were different. They actually took time to think before responding. The models showed impressive results on physics, biology, and chemistry benchmarks. But here's what really mattered: they introduced a tradeoff that's now part of your daily life in 2025. Want to solve harder problems? Sure, but you'll need to spend more compute and wait longer for answers. This balance between what you can do, how long it takes, and what it costs has become the core of working with reasoning models.

o1 wasn't just another scaled-up model. It represented a real shift in how these systems work. The model focused on thinking during inference and checking its own outputs. This changed how we think about performance versus resource consumption and response times. Today in 2025, the whole reasoning model category builds on these ideas. You can push for higher accuracy on complex tasks, but you have to decide if the extra deliberation is worth the cost and delay. If you want to understand the transformer model architectures that make all this possible, check out our comprehensive guide.

Let me walk you through how modern reasoning models actually work in 2025. We'll look at where o1 started this trend, how today's models build on that foundation, and how to pick the right approach for what you're trying to do.

Foundations of Reasoning Performance

Two key breakthroughs kicked off the reasoning era. Both came into focus with o1 and then spread everywhere. A third idea, the generator-verifier gap, explains when they pay off most.

Extended thinking at inference time

Post-training helped, sure. Reinforcement learning and preference tuning moved things forward. But then researchers let the model think longer at inference, and accuracy kept climbing. OpenAI reported that o1's performance improved consistently both with more reinforcement learning during training and with more time spent thinking at test time. More test-time computation gave the model space to refine intermediate steps, check its work, and arrive at better answers. You control this knob in many systems now. More thinking usually means better results, but also higher costs and longer waits. For a deeper dive into how reinforcement learning from human feedback shapes model behavior and safety, our practical guide on reinforcement learning from human feedback covers implementation and safeguards step by step.
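To make the knob concrete, here is a minimal sketch that builds request bodies with a reasoning budget for two providers. It assumes OpenAI's Chat Completions `reasoning_effort` field and Anthropic's extended-thinking `thinking` block with `budget_tokens`, as documented at the time of writing. The model names are hypothetical placeholders and nothing is sent over the network, so check the current provider docs before relying on these field names.

```python
# Sketch only: builds request bodies, never calls an API.
VALID_EFFORTS = {"low", "medium", "high"}  # levels originally documented for o-series models


def openai_chat_request(prompt: str, effort: str = "medium") -> dict:
    """Chat Completions body with a reasoning-effort setting."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"unsupported reasoning_effort: {effort!r}")
    return {
        "model": "YOUR_OPENAI_REASONING_MODEL",  # hypothetical placeholder
        "reasoning_effort": effort,  # higher effort: more hidden reasoning tokens
        "messages": [{"role": "user", "content": prompt}],
    }


def anthropic_thinking_request(
    prompt: str, budget_tokens: int = 4000, max_tokens: int = 8000
) -> dict:
    """Messages API body with extended thinking and an explicit token budget."""
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": "YOUR_CLAUDE_MODEL",  # hypothetical placeholder
        "max_tokens": max_tokens,  # caps thinking plus visible output
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    import json

    print(json.dumps(openai_chat_request("Plan a 3-city rail trip", effort="high"), indent=2))
    print(json.dumps(anthropic_thinking_request("Plan a 3-city rail trip", budget_tokens=2048), indent=2))
```

Raising `effort` or `budget_tokens` buys more deliberation at the price of more tokens and latency. The validation mirrors Anthropic's documented constraint that the thinking budget be at least 1,024 tokens and below `max_tokens`.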

Verifying outputs with consensus voting

The second breakthrough was consensus voting, often called self-consistency or majority voting. Have the model generate multiple solutions, then pick the most common answer or the best-verified one. Simple idea, big gains with just a modest number of samples. In one math benchmark example, accuracy went from 33 percent to 50 percent using consensus voting. Think of it as structured self-checking that turns small improvements into reliable wins.
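Consensus voting is easy to sketch. The version below assumes you already have several sampled final answers (hard-coded strings stand in for model outputs) and uses a deliberately simple normalization rule, which is an assumption you would adapt to your task.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Assumed rule: treat "42", " 42 " and "42.0" as the same final answer.
    text = answer.strip().lower()
    try:
        return str(float(text))
    except ValueError:
        return text


def majority_vote(samples: list[str]) -> tuple[str, float]:
    """Return the most common normalized answer and its share of the votes."""
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(normalize(s) for s in samples)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)


if __name__ == "__main__":
    # Stand-ins for five sampled completions to the same math question.
    print(majority_vote(["42", "41", "42.0", " 42", "17"]))
```

For those five samples the function returns `("42.0", 0.6)`. The vote share doubles as a rough confidence signal, and ties go to the answer seen first, because `Counter.most_common` orders equal counts by first appearance.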

Leveraging the generator-verifier gap

Some problems are easier to check than to solve in one go. Sudoku, algorithmic coding tasks, lots of math problems fit this pattern. If you can verify candidate answers quickly, you can let the model explore and then keep only what passes the checks. That's the generator-verifier gap. When you have a robust verifier, more inference-time compute really pays off. When you don't, the model needs to get it right immediately, which limits how much extra thinking helps.
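Here is a toy illustration of the generator-verifier gap using subset sum: finding indices whose values hit a target is hard in general, but checking a proposed answer is a single sum. A seeded random guesser (the hypothetical `generate` function) stands in for the model, and only candidates that pass the exact verifier are kept.

```python
import random
from typing import Optional


def verify(numbers: list[int], target: int, picks: tuple[int, ...]) -> bool:
    # Cheap, exact check: distinct in-range indices whose values sum to target.
    return (
        len(set(picks)) == len(picks)
        and all(0 <= i < len(numbers) for i in picks)
        and sum(numbers[i] for i in picks) == target
    )


def generate(numbers: list[int], rng: random.Random) -> tuple[int, ...]:
    # Hypothetical stand-in for a model: propose a random subset of indices.
    k = rng.randint(1, len(numbers))
    return tuple(sorted(rng.sample(range(len(numbers)), k)))


def solve(
    numbers: list[int], target: int, budget: int = 1000, seed: int = 0
) -> tuple[Optional[tuple[int, ...]], int]:
    """Spend up to `budget` generations; return the first verified candidate."""
    rng = random.Random(seed)
    for attempt in range(1, budget + 1):
        candidate = generate(numbers, rng)
        if verify(numbers, target, candidate):
            return candidate, attempt
    return None, budget


if __name__ == "__main__":
    picks, attempts = solve([3, 34, 4, 12, 5, 2], target=9)
    print(picks, "found after", attempts, "attempts")
```

Because the verifier is exact, every extra attempt is pure upside until the budget runs out. With a weak or noisy verifier, more samples would help far less.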

From o1 to the 2025 Reasoning Stack

Those foundations changed how models approach complex work. In 2025, you see this change in three practical ways.

From immediate answers to deliberate reasoning

Earlier models often just spit out the first plausible answer. Fine for easy questions. Not so great for multi-step work. Reasoning models take a different approach. They think first, then answer. This deliberate process reduces brittle, first-guess failures and improves reliability on hard tasks.

Mastering complex tasks with structured thought

Reasoning models use structured scratchpads. You might hear this called chain of thought, tree search, or hypothesis testing. The label doesn't really matter. What matters is the behavior. The model explores options, evaluates intermediate steps, and prunes bad paths. On a tough math or coding problem, this looks like stepwise progress, checks, and corrections. You get a well-supported answer rather than a guess.

Less prompt micromanagement, smarter interactions

You don't need to hand-hold with elaborate prompts for many tasks anymore. Modern reasoning models figure out intent and plan useful steps with less instruction. You still get better results with clear goals and constraints. You just don't have to script the entire path. This makes your interactions simpler without sacrificing depth. For practical techniques on guiding models with minimal prompting, see our article on in-context learning strategies, which covers prompt engineering and example-driven approaches.

But here's an important caution!

When you need strict adherence to precise instructions, deliberate reasoning models can be frustrating. They run internal chain-of-thought that often nudges them to reinterpret your request. If you need exact formatting, rigid templates, or step-by-step compliance, go with a non-reasoning instruction follower instead. Something like GPT-4.1 often sticks more closely to clear directions with less drift. Actually, in many cases, you don't want reasoning mode at all. Turn it off when precision and predictability matter more than open-ended problem solving.

How the Reasoning Process Works

Reasoning models integrate thinking into their core operation. They talk themselves through the work, then present a concise result. Here's the simple template you can expect:

Identifying the problem and defining the solution space. The model clarifies goals and constraints.

Developing hypotheses. It proposes options or approaches.

Testing hypotheses. It evaluates feasibility and likely outcomes.

Rejecting unworkable ideas and backtracking. It drops weak paths and revisits earlier steps when evidence contradicts a plan.

Identifying and following the most promising path. It commits to a solution and prepares a clear answer.
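The five-step template maps neatly onto classic backtracking search. The N-Queens sketch below is an analogy, not a description of what happens inside a model: each comment marks the step of the template it corresponds to.

```python
from typing import Optional


def solve_n_queens(n: int) -> Optional[list[int]]:
    """Return the queen's column for each row, or None if no placement exists."""
    # 1. Solution space: one queen per row; cols[r] is the column used in row r.
    cols: list[int] = []

    def is_safe(row: int, col: int) -> bool:
        # 3. Test a hypothesis against every queen already placed.
        for r, c in enumerate(cols):
            if c == col or abs(c - col) == abs(r - row):
                return False
        return True

    def place(row: int) -> bool:
        if row == n:
            return True  # 5. Every row filled: commit to this path.
        for col in range(n):  # 2. Hypotheses: each column for this row.
            if is_safe(row, col):
                cols.append(col)
                if place(row + 1):
                    return True
                cols.pop()  # 4. Dead end further down: reject and backtrack.
        return False

    return cols if place(0) else None


if __name__ == "__main__":
    print(solve_n_queens(4))
    print(solve_n_queens(3))
```

`solve_n_queens(4)` returns `[1, 3, 0, 2]`, and `solve_n_queens(3)` returns `None` because no placement works.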

This isn't some bolt-on feature. It's a training and inference pattern. That's why it generalizes across domains like coding, quantitative reasoning, strategy, logistics, and scientific analysis.

Understanding Reasoning Tokens

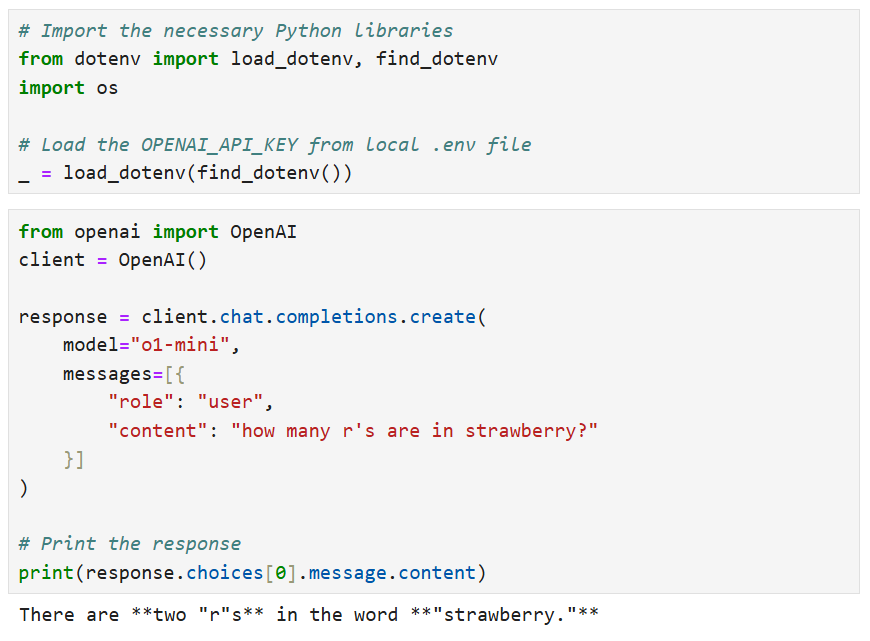

Let me make this concrete with a classic example. How many "r"s are in the word strawberry? Models with internal reasoning handle this easily. The o1 series gives us a good reference point for how token usage looks when a model thinks before answering.

Let's look at the token usage details from this response:

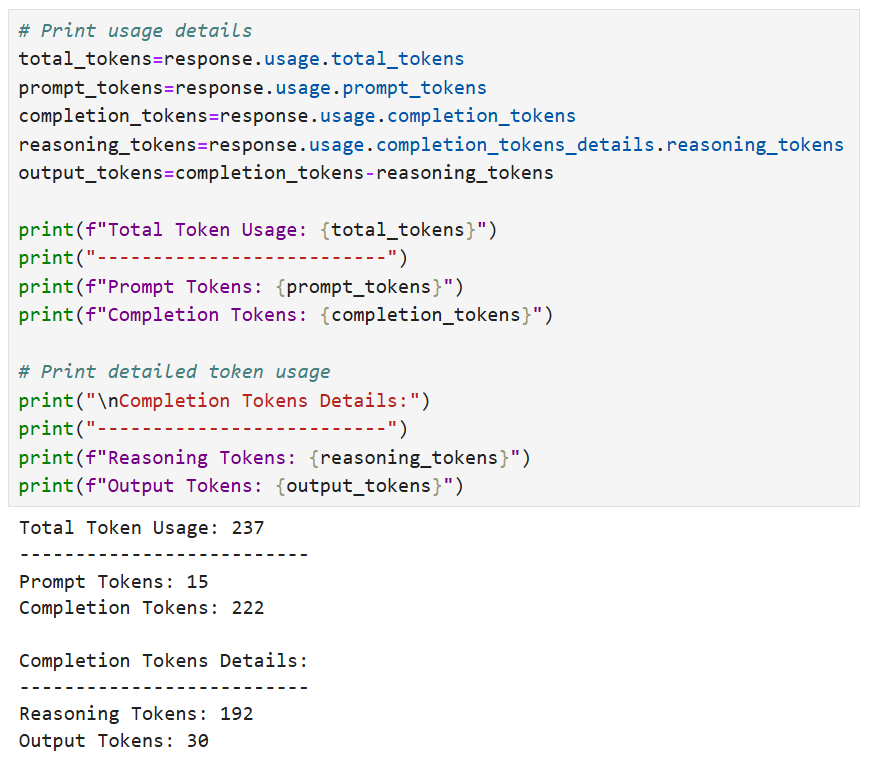

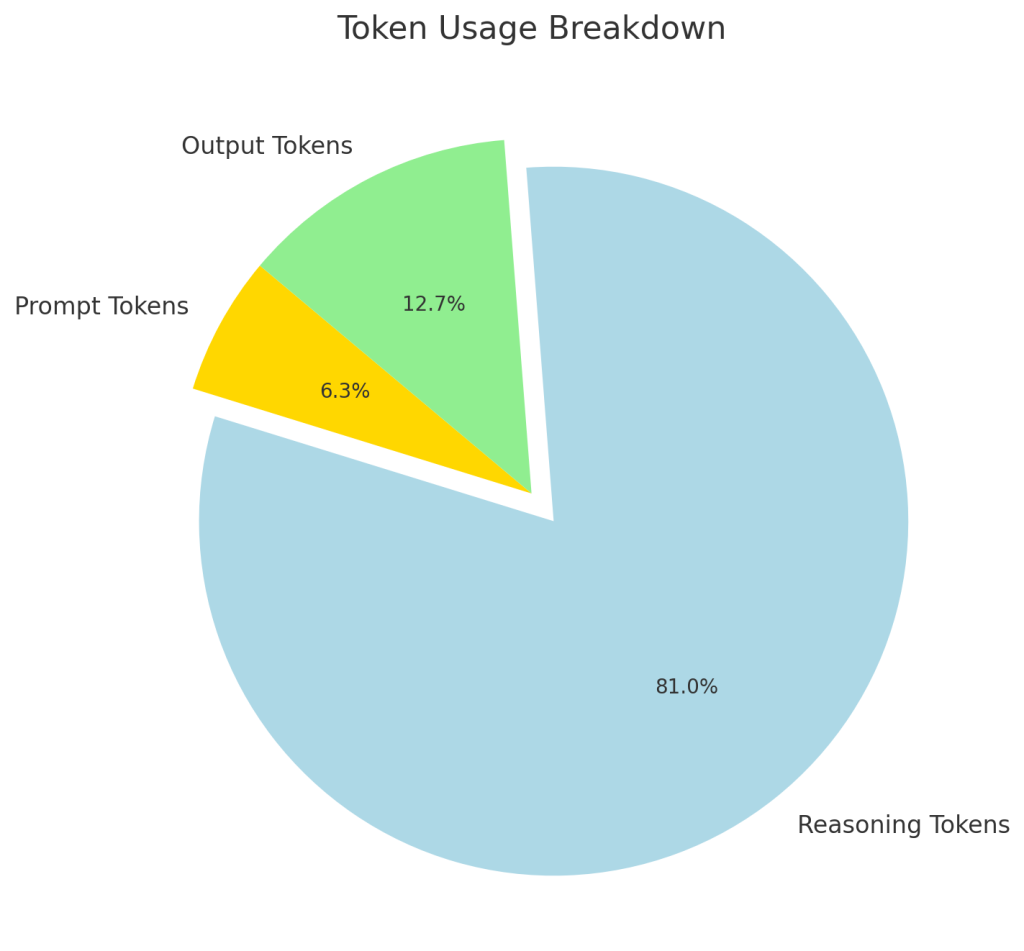

Token usage breakdown

The visible output is short. Maybe around 30 tokens. But the internal reasoning can be several times larger. In the example above, the total reached 192 tokens. About 86 percent of the completion tokens went to hidden reasoning.
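If you want to reproduce this kind of breakdown for your own requests, the arithmetic is simple. This sketch assumes an OpenAI Chat Completions-style usage object, where `completion_tokens_details.reasoning_tokens` is counted inside `completion_tokens`. The numbers in the example are illustrative, not the ones from the screenshot above.

```python
def reasoning_breakdown(usage: dict) -> dict:
    """Split completion tokens into hidden reasoning and visible output.

    Assumes reasoning_tokens is a subset of completion_tokens, as in
    OpenAI's Chat Completions usage object.
    """
    completion = usage["completion_tokens"]
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    visible = completion - reasoning
    share = reasoning / completion if completion else 0.0
    return {"reasoning": reasoning, "visible": visible, "reasoning_share": share}


if __name__ == "__main__":
    example_usage = {  # illustrative numbers, not real API output
        "prompt_tokens": 20,
        "completion_tokens": 200,
        "total_tokens": 220,
        "completion_tokens_details": {"reasoning_tokens": 170},
    }
    print(reasoning_breakdown(example_usage))
```

For the illustrative usage, 170 of 200 completion tokens are reasoning, so only 30 are visible and the reasoning share is 0.85.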

Reasoning tokens are internal. They're used to plan, check, and revise. You don't see them in the final answer. Output tokens are the words you actually read. They form the concise response.

Practical implications

This split creates a clear tradeoff. More hidden reasoning can mean better accuracy. It also raises cost and latency, even when the output is brief.

Reasoning tokens are ephemeral. They're generated during a turn and discarded once it ends, so they don't carry over into the next turn's context. If you want continuity, ask the model to output a summary of its reasoning, then feed that back into the next step.

Cost. Reasoning tokens count toward total usage and are typically billed as output tokens, even though you never see them. You can reduce waste by clarifying goals, limiting search breadth, and capping the thinking budget. For actionable tips on crafting effective prompts and getting consistent results, check out our guide to prompt engineering best practices.

Context limit. Internal tokens consume context capacity. If your task requires heavy reasoning, plan for that usage so your prompt and final output still fit.
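Both the cost and the context points reduce to simple token arithmetic. The sketch below uses hypothetical per-million-token prices and a hypothetical context window, and it assumes reasoning tokens are billed at the output rate, which is how OpenAI, for example, bills them. On OpenAI's reasoning models, `max_completion_tokens` caps reasoning and visible output together, so it is a natural place to enforce the reserve you plan here.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical USD prices per million tokens; substitute your provider's rates.
    input_per_m: float
    output_per_m: float


def estimate_cost(
    prompt_tokens: int, reasoning_tokens: int, output_tokens: int, price: Pricing
) -> float:
    # Assumption: hidden reasoning is billed at the same rate as visible output.
    billed_output = reasoning_tokens + output_tokens
    return (prompt_tokens * price.input_per_m + billed_output * price.output_per_m) / 1_000_000


def fits_context(
    prompt_tokens: int, reasoning_reserve: int, output_reserve: int, context_window: int
) -> bool:
    # Hidden reasoning shares the window with the prompt and the visible answer.
    return prompt_tokens + reasoning_reserve + output_reserve <= context_window


if __name__ == "__main__":
    price = Pricing(input_per_m=2.0, output_per_m=8.0)  # hypothetical rates
    print(f"${estimate_cost(1_000, 4_000, 500, price):.4f}")
    print(fits_context(100_000, 25_000, 5_000, context_window=128_000))
```

With these example prices, 1,000 prompt tokens plus 4,000 reasoning and 500 visible tokens cost about $0.038, and more than four-fifths of that comes from tokens you never see. The second check fails because a 100,000-token prompt leaves too little room for the planned reasoning and output reserves.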

Once you understand and manage reasoning tokens, you can strike the right balance between accuracy, speed, and spend.

The 2025 Reasoning Model Landscape

The market has really broadened since o1 introduced the pattern. You've got several categories to choose from now. Use o1 as your mental baseline, then map your needs to these options.

Model catalogs change constantly. To find current options and see which models are explicitly marked as reasoning, check these provider model listings. Look for labels mentioning reasoning, test-time thinking, chain of thought, or verifier support.

OpenAI models catalog: https://platform.openai.com/docs/models

Anthropic model overview: https://docs.anthropic.com/en/docs/about-claude/models

Google Gemini models: https://ai.google.dev/gemini-api/docs/models/gemini

Meta Llama model family: https://ai.meta.com/llama

Mistral models: https://docs.mistral.ai/getting-started/models

Qwen model family: https://qwenlm.github.io

DeepSeek models and docs: https://api-docs.deepseek.com

Generalist reasoners

Pick a generalist when you need breadth and consistent performance across domains. These models handle complex conversations, multi-step analysis, and synthesis across varied topics. You're trading higher latency and cost for versatility. Use the provider links above to see which current models are positioned as generalist reasoners.

Domain-optimized specialists

Choose a specialist for coding, math, science, quantitative finance, or other technical niches. These models are tuned for structured reasoning and tool use in narrow areas. You get faster responses and lower costs on in-domain tasks with strong accuracy. Check the provider listings to find coding, math, and science-focused variants.

Lightweight and speed-first models

When you need near real-time results, go lightweight. These models use shorter reasoning traces or heuristic shortcuts. They deliver responsive interactions for simpler tasks. Use them for routing, summarization, or quick checks. Verify on the provider pages which compact models are optimized for speed.
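A lightweight routing layer can encode these tradeoffs, including the earlier caution about turning reasoning off for strict formatting. The tier names and task flags below are hypothetical labels, not real model IDs or an established API; map them onto whatever models you pick from the provider listings.

```python
from dataclasses import dataclass


@dataclass
class Task:
    prompt: str
    needs_strict_format: bool = False  # rigid templates, exact formatting
    domain: str = "general"  # e.g. "code", "math", "science", "general"
    multi_step: bool = False
    latency_sensitive: bool = False


SPECIALIST_DOMAINS = {"code", "math", "science"}


def route(task: Task) -> str:
    """Pick a model tier; the returned names are hypothetical labels."""
    if task.needs_strict_format:
        return "instruction-follower"  # reasoning off: less drift from the template
    if task.latency_sensitive and not task.multi_step:
        return "lightweight"
    if task.domain in SPECIALIST_DOMAINS:
        return "specialist"
    if task.multi_step:
        return "generalist-reasoner"
    return "lightweight"


if __name__ == "__main__":
    for t in [
        Task("Fill this JSON template exactly", needs_strict_format=True),
        Task("Prove the bound holds", domain="math", multi_step=True),
        Task("Summarize this ticket", latency_sensitive=True),
        Task("Plan a data-center migration", multi_step=True),
    ]:
        print(route(t), "<-", t.prompt)
```

Ordering matters: the strict-format check runs first, so a rigid template never lands on a model that might reinterpret it.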

Verifier-assisted pipelines

If you can check answers cheaply, add a verifier. Pair a generator with tests, unit checks, symbolic solvers, or external tools. Let the system iterate and keep only the answers that pass. This pattern works great for code, math, data validation, and constrained planning. Browse the provider listings to pick generators that integrate well with your verifiers and tools.
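Here is a minimal sketch of that loop for code. The three `median_*` functions are hypothetical stand-ins for candidates a generator might propose; unit tests act as the verifier, and only a candidate that passes every test is returned.

```python
from typing import Callable, Optional, Sequence

# Hypothetical candidates a generator might propose for "median of a list".
def median_v1(xs: list) -> float:
    return sorted(xs)[len(xs) // 2]  # bug: wrong for even-length lists


def median_v2(xs: list) -> float:
    s = sorted(xs)
    mid = len(s) // 2
    return s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2


def median_v3(xs: list) -> float:
    return sum(xs) / len(xs)  # bug: computes the mean, not the median


# The verifier: (input, expected output) pairs.
TESTS = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5), ([5], 5), ([1, 1, 10], 1)]


def passes(fn: Callable[[list], float], tests: Sequence) -> bool:
    for args, expected in tests:
        try:
            if fn(list(args)) != expected:  # copy so a candidate can't mutate the test
                return False
        except Exception:
            return False  # a crash counts as a failure
    return True


def first_verified(candidates: Sequence[Callable], tests: Sequence) -> Optional[Callable]:
    for fn in candidates:
        if passes(fn, tests):
            return fn
    return None  # a real pipeline would ask the generator for more candidates here


if __name__ == "__main__":
    winner = first_verified([median_v1, median_v3, median_v2], TESTS)
    print(winner.__name__ if winner else "no candidate passed")
```

Here `first_verified` returns `median_v2`. The `[1, 1, 10]` case is what exposes the mean-based candidate, which is the general lesson: the pipeline is only as strong as the tests you give it.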

Agentic and tool-rich systems

For multi-step workflows, connect a reasoning model to tools and memory. Let it plan, call APIs, read documents, and write intermediate artifacts. Use strict evaluators at key steps. This gives you reliability on longer tasks, though it requires careful orchestration. Check the provider pages for tool-use features and API capabilities. If you're interested in practical implementation, our field guide to building multi-agent systems with CrewAI and LangChain offers hands-on lessons for orchestrating complex agentic pipelines.
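The skeleton of such a loop fits in a few dozen lines. In this sketch a hypothetical `scripted_planner` function stands in for the reasoning model, the two tools are toys, and `evaluate` is a hypothetical strict check applied after every tool call; none of these names come from a particular framework.

```python
from typing import Callable


# Hypothetical toy tools; a real system would call APIs or read documents here.
def lookup_price(item: str) -> float:
    prices = {"widget": 2.5, "gadget": 4.0}
    return prices[item]


def multiply(a: float, b: float) -> float:
    return a * b


TOOLS: dict[str, Callable[..., float]] = {"lookup_price": lookup_price, "multiply": multiply}


def scripted_planner(memory: list[dict]) -> dict:
    """Stand-in for a reasoning model: choose the next step from memory."""
    if not memory:
        return {"tool": "lookup_price", "args": ["widget"]}
    if len(memory) == 1:
        return {"tool": "multiply", "args": [memory[0]["result"], 12]}
    return {"final": f"12 widgets cost {memory[-1]['result']:.2f}"}


def evaluate(result: object) -> bool:
    # Strict evaluator: every tool result must be a non-negative number.
    return isinstance(result, (int, float)) and result >= 0


def run_agent(planner: Callable[[list[dict]], dict], max_steps: int = 5) -> str:
    memory: list[dict] = []  # intermediate artifacts the planner can read back
    for _ in range(max_steps):
        step = planner(memory)
        if "final" in step:
            return step["final"]
        result = TOOLS[step["tool"]](*step["args"])
        if not evaluate(result):
            raise RuntimeError(f"evaluator rejected {step['tool']} -> {result!r}")
        memory.append({"tool": step["tool"], "result": result})
    raise RuntimeError("step budget exhausted")


if __name__ == "__main__":
    print(run_agent(scripted_planner))
```

`run_agent(scripted_planner)` returns `"12 widgets cost 30.00"`. Swapping the stub planner for real model calls keeps the same shape: the step budget bounds cost, and the evaluator stops a bad intermediate result before later steps build on it.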

Where might this be used?

Data analysis. Interpreting complex datasets, like genomic results, and performing advanced statistical reasoning.

Mathematical problem-solving. Deriving solutions or proofs for challenging questions in math or physics.

Experimental design. Proposing chemistry experiments or interpreting complicated physics results with clear assumptions and controls.

Scientific coding. Writing and debugging specialized code for simulations, numerical methods, or data pipelines.

Biological and chemical reasoning. Tackling advanced questions that require deep domain knowledge and careful validation.

Algorithm development. Creating or optimizing algorithms for workflows in areas like computational neuroscience or bioinformatics.

Literature synthesis. Reasoning across multiple research papers to form defensible conclusions, especially in interdisciplinary fields.

Planning and operations. Building reliable multi-step plans with verification and tool use.

Conclusion

By 2025, reasoning models have matured into a category of their own. The core idea first came into focus with o1: spend more compute at inference time. Let the model think, check, and refine. You get smarter answers on hard problems, at the cost of more tokens and higher latency.

These models shine when you can iterate and verify. Coding, quantitative reasoning, scientific analysis, legal drafting with citation checks, and structured planning all benefit. You get the best results when you design prompts and workflows that control the amount of thinking, add verification where possible, and keep costs in check.