Transformers: Demystifying the Magic Behind Large Language Models

Gain a practical understanding of the Transformer architecture that gives Large Language Models their extraordinary abilities.

I've spent way too much time diving into how Large Language Models actually work, and I want to share what I've learned. Not the academic stuff you'll find in research papers, but the practical understanding you need to actually use these tools effectively.

A Quick History Lesson (Because Context Matters)

Generative algorithms have been around since the 1950s. Yeah, that long. Back then, we had things like Markov chains, which were basically mathematical systems that tried to predict what comes next based on what's happening now. Pretty basic stuff, but it laid important groundwork.

The thing is, these early models weren't great. Actually, let me be honest: they were pretty terrible at generating anything that made sense. They'd lose track of what they were talking about after just a few words. Recurrent Neural Networks (RNNs) came along and improved things, but they still struggled with longer texts.

Then 2017 happened. The Transformer architecture showed up and changed everything. Within a few years, models built on it were matching and sometimes beating human baselines on a range of language benchmarks. It was like watching someone switch from a bicycle to a sports car.

Why RNNs Couldn't Cut It

Before we dive into Transformers, let me explain why RNNs fell short. I think understanding this really helps you appreciate what makes Transformers special.

RNNs processed text one word at a time, like reading a book word by word while trying to remember everything that came before. They maintained something called a "hidden state," basically a running memory that tried to keep track of context. Based on that context, they'd predict the next word.
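To make the hidden-state idea concrete, here's a minimal sketch of a single-unit RNN in Python. It's a deliberate simplification: real RNNs use vectors and weight matrices rather than single numbers, and the weights `w_x`, `w_h`, and `b` below are arbitrary values I picked for illustration, not trained ones. The update rule, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), is the standard "vanilla" RNN form.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a toy one-unit RNN over a sequence of scalar inputs.

    The weights are arbitrary illustrative values, not trained ones.
    """
    h = 0.0  # the hidden state starts out empty
    states = []
    for x in inputs:
        # The new state depends on the previous one, so step t cannot
        # start until step t-1 has finished.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Feed one "signal" followed by zeros and watch it fade from the hidden state.
states = rnn_forward([1.0, 0.0, 0.0, 0.0])
print([round(s, 3) for s in states])
```

Notice the `for` loop: there's no way to compute step 3 before step 2 is done, and the initial signal shrinks with every step it has to survive.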

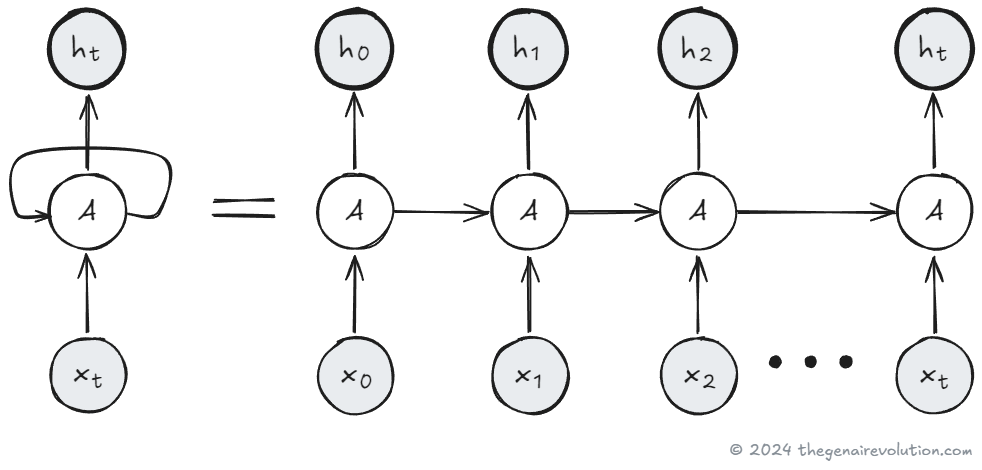

This diagram shows how we unfold a Recurrent Neural Network over time. The left side shows the compact representation, while the right side shows the network unrolled from t=0 to t=T. Here x_t is the input at time step t, h_t is the hidden state at time step t, and A is the RNN cell that processes the input and the previous hidden state to generate the new hidden state.

Here's where things got messy. First problem: you couldn't parallelize across a sequence. The model had to finish processing each word before moving on to the next one. On modern GPUs, which are built for massive parallel processing, this was incredibly inefficient.

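Second problem, and this one's crucial: the hidden state is a fixed-size vector, so it could only hold so much information. As sequences got longer, the model would start forgetting earlier parts. Training made this worse through what's called the "vanishing gradient problem": the learning signal has to flow backward through every time step, shrinking a little at each one, so by the time it reaches the early words it's almost gone and the model struggles to learn long-range connections. Imagine trying to remember a phone number someone told you five minutes ago while they keep talking. Eventually, you lose those first few digits.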
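Here's a rough numeric illustration of the vanishing gradient problem, using a toy one-unit tanh RNN with arbitrarily chosen weights (not a trained model). By the chain rule, the gradient of the last hidden state with respect to the first one is a product of per-step factors w_h * (1 - h_t^2), because the derivative of tanh(z) is 1 - tanh(z)^2. When each factor is below 1, the product shrinks exponentially with sequence length.

```python
import math

def gradient_through_time(inputs, w_x=0.5, w_h=0.8):
    """Return d(h_T)/d(h_1) for a toy one-unit tanh RNN.

    Chain rule: d h_t / d h_{t-1} = w_h * (1 - h_t**2),
    since the derivative of tanh(z) is 1 - tanh(z)**2.
    """
    h = 0.0
    grad = 1.0
    for t, x in enumerate(inputs):
        h = math.tanh(w_x * x + w_h * h)
        if t > 0:  # one local derivative per step after the first
            grad *= w_h * (1.0 - h * h)
    return grad

for length in (2, 10, 50):
    print(length, gradient_through_time([1.0] * length))
```

With larger recurrent weights the factors can exceed 1 and the gradient explodes instead; architectures like LSTMs were designed largely to ease the vanishing case.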

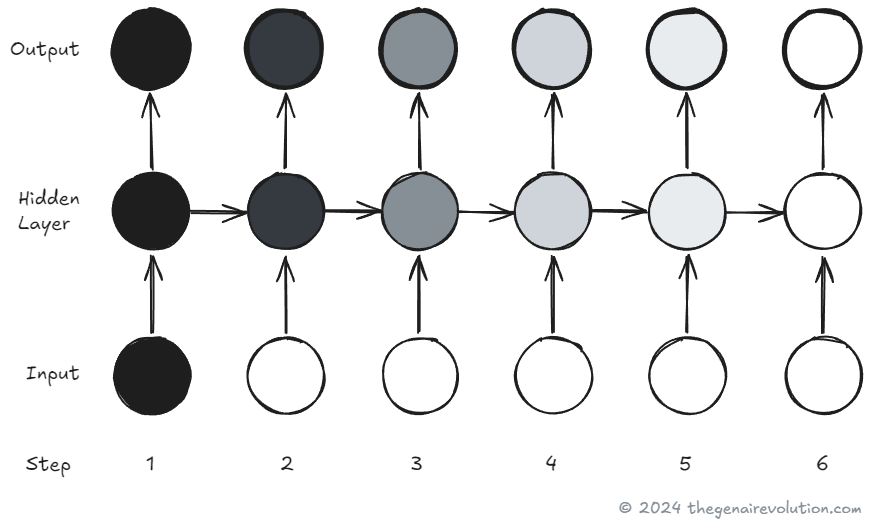

The illustration shows a Recurrent Neural Network processing a sequence of inputs, with each hidden state depending on the previous one. As the sequence progresses, the fading color in the hidden layers visually represents the vanishing gradient problem. You can see how the network gradually loses its ability to retain information from earlier time steps, making it difficult to capture long-range dependencies.

Language Is Complicated (Really Complicated)

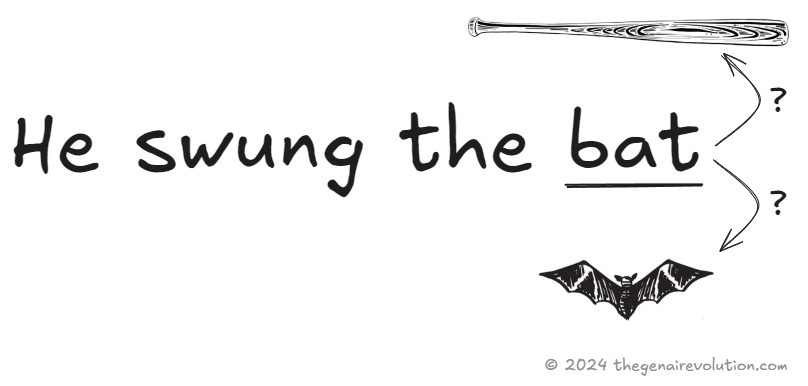

Here's something I've come to appreciate: language is incredibly complex. Take a simple sentence like "He swung the bat." Are we talking about baseball or a flying mammal? The model needs context to know.

Or consider "John saw Annie with the telescope." Who has the telescope, John or Annie? Resolving that kind of ambiguity can require the surrounding context, sometimes even the entire document. RNNs struggled to carry that much context across long stretches of text.

Enter the Transformer

The Transformer architecture, introduced in the 2017 paper "Attention Is All You Need," tackled these problems. But it wasn't just one improvement. It was several breakthroughs working together.

First, Transformers could process all the words in a sequence in parallel during training. This meant they could take full advantage of modern GPUs and train on massive datasets much faster. (Generating text still happens one token at a time.) But honestly, the real magic came from something called self-attention, which I'll explain in a bit.

Today, Transformers power everything from ChatGPT to many image generators and speech models. They've become the foundation for a large share of modern AI applications.

The Self-Attention Magic

Okay, this is where things get really interesting. Self-attention is what gives Transformers their superpower.

Instead of looking at words one by one like RNNs, self-attention lets the model look at every word in relation to every other word simultaneously. Each word gets to "attend" to all the others. The model learns how to compute attention weights that determine how much each word should draw on every other word, and those weights are recalculated for every new input.
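Here's a minimal sketch of scaled dot-product self-attention, the form used in the original Transformer, in plain Python so every step is visible. Each token vector is projected into a query, a key, and a value; each query is scored against every key, the scores are divided by the square root of the key dimension and passed through softmax, and the resulting weights average the values. The projection matrices `w_q`, `w_k`, and `w_v` are what a model actually learns; here I use identity matrices and made-up 2-dimensional token vectors purely for illustration.

```python
import math

def project(x, w):
    """Multiply row vector x (length d_in) by matrix w (d_in rows x d_out cols)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a list of token vectors."""
    qs = [project(x, w_q) for x in xs]
    ks = [project(x, w_k) for x in xs]
    vs = [project(x, w_v) for x in xs]
    d_k = len(ks[0])
    outputs, all_weights = [], []
    for q in qs:
        # Score this token against every token in the sequence, including itself.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in ks]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, vs)) for j in range(len(vs[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d "embeddings"
outputs, weights = self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 2) for w in row])
```

The first token, [1, 0], splits its attention equally between itself and the third token, which shares its direction, and pays less attention to the second.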

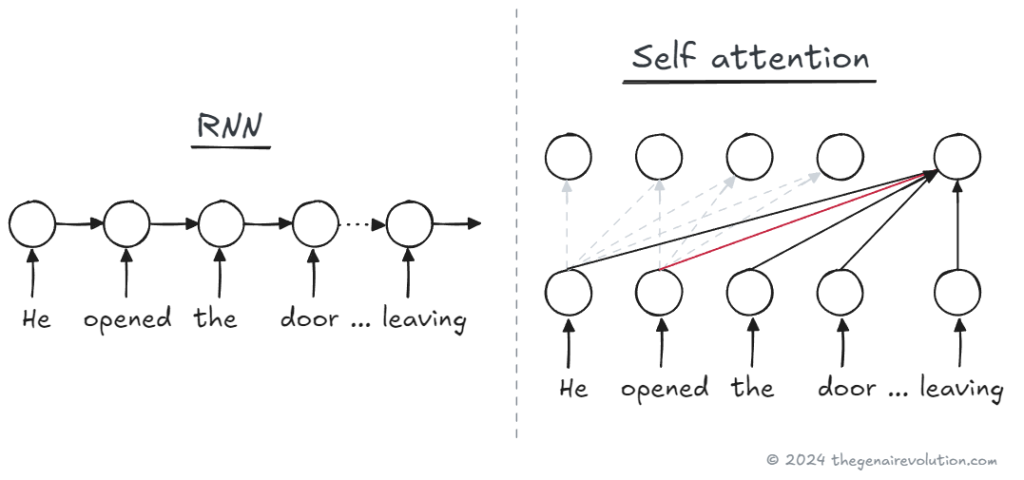

This illustration contrasts a Recurrent Neural Network with a self-attention mechanism. On the left, the RNN processes the sentence sequentially, passing information from one word to the next. On the right, the self-attention mechanism allows direct connections between all words, enabling the model to consider relationships between distant words simultaneously, as shown by the connecting lines. This illustrates how self-attention can capture long-range dependencies and contextual information more effectively than RNNs.

Think about it this way. When you read a sentence, your brain doesn't just process it word by word. You're constantly making connections between different parts, understanding how the beginning relates to the end, how pronouns connect to their subjects. That's essentially what self-attention does mathematically.

These models learn attention patterns during pre-training on massive text datasets.

How Transformers Actually Work

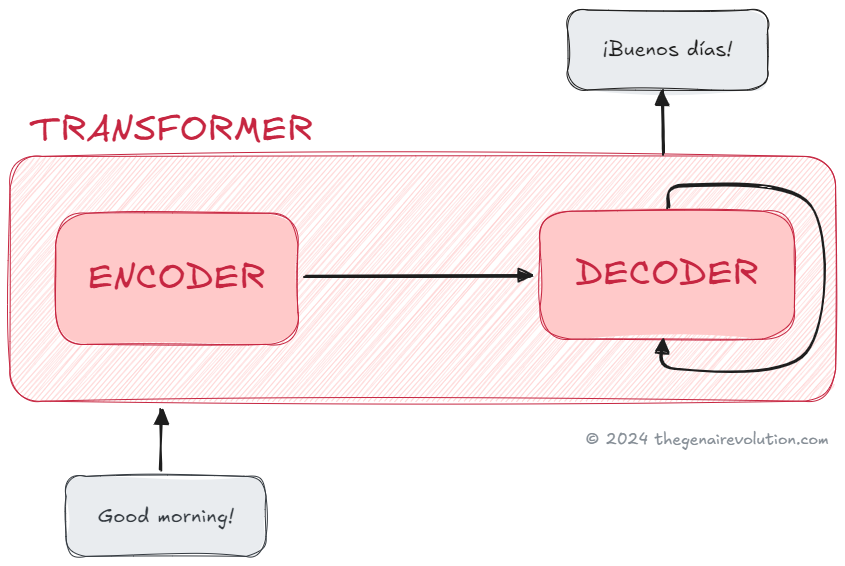

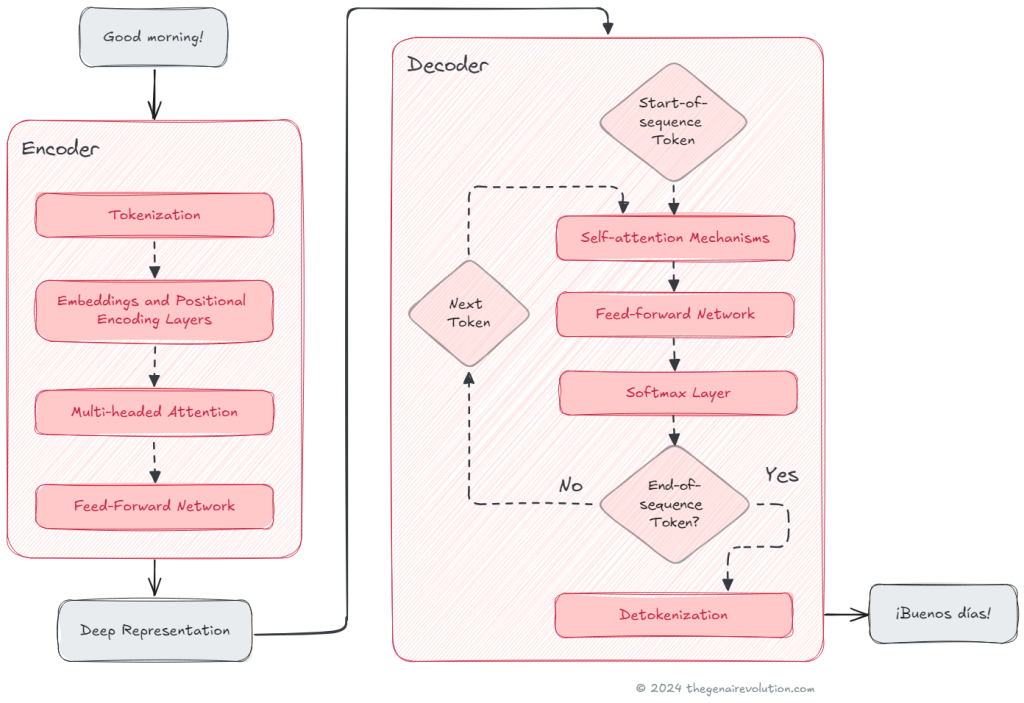

Let me walk you through what happens when a Transformer processes text. The original architecture has two main parts: an encoder and a decoder.

The encoder's job is to understand the input text and create a mathematical representation of it. It captures all the relationships and context. The decoder then uses this representation to generate output text, predicting one word at a time based on what the encoder understood and what it's already generated.

Starting with Tokens

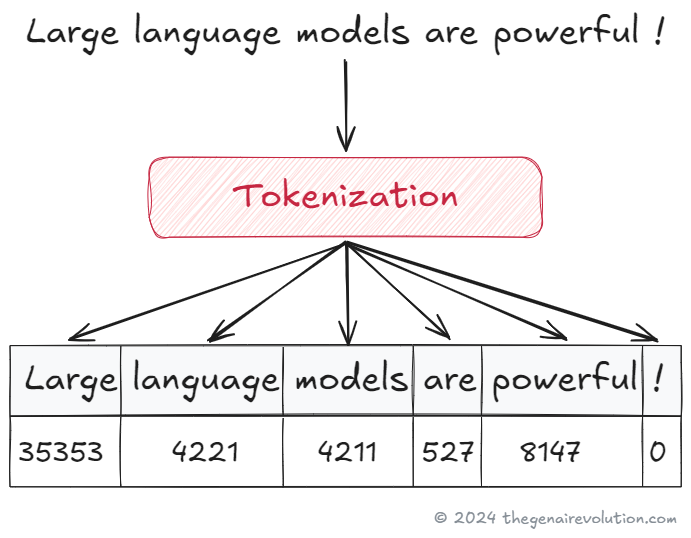

Models can't read words. They need numbers. So the first step is tokenization, converting text into token IDs. Each ID corresponds to a position in the model's vocabulary list.

Different models use different tokenization methods. Some break text into whole words, others into subwords or even characters. The key thing is consistency. Whatever method was used during training must be used during inference.
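A minimal sketch of the token-ID idea, using a toy word-level tokenizer. This is an illustration, not how production LLMs tokenize: most use subword schemes such as byte-pair encoding. The `<unk>` ID for unknown words and the helper names are my own choices for the example.

```python
import re

def tokenize(text):
    # Split into lowercase words and standalone punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(corpus):
    """Assign an ID to each distinct token; ID 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for token in tokenize(corpus):
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    # Tokens missing from the training vocabulary map to <unk>.
    return [vocab.get(token, vocab["<unk>"]) for token in tokenize(text)]

def decode(ids, vocab):
    id_to_token = {i: token for token, i in vocab.items()}
    return " ".join(id_to_token[i] for i in ids)

vocab = build_vocab("The dog bites the man.")
print(encode("The man bites the dog.", vocab))  # [1, 4, 3, 1, 2, 5]
print(encode("The cat bites.", vocab))          # [1, 0, 3, 5]
```

Because `encode` must use the exact vocabulary built at training time, a word it has never seen, like "cat" here, collapses to `<unk>`. Subword tokenizers avoid most of this by breaking unfamiliar words into smaller known pieces.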

The Embedding Space

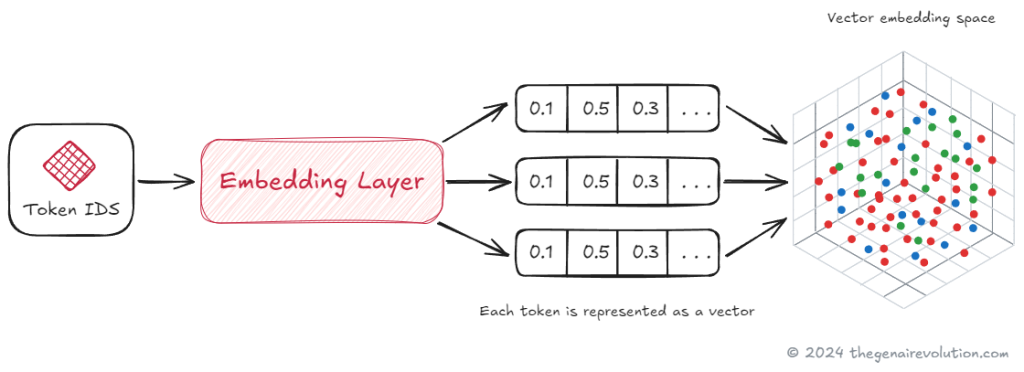

Once we have token IDs, they go to the embedding layer. This is where things start getting mathematical, but stay with me.

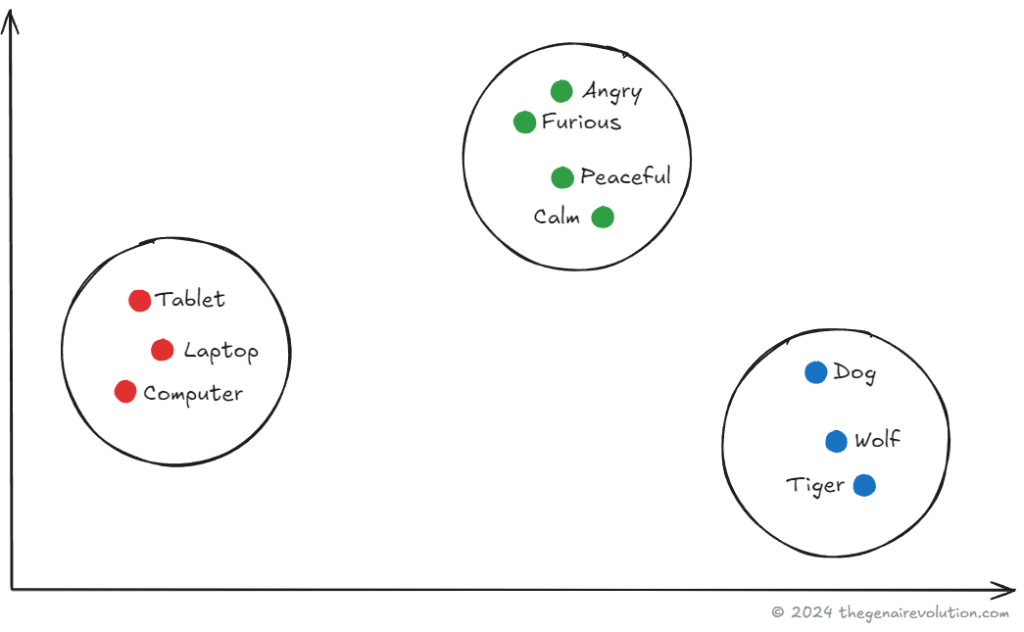

Each token becomes a multi-dimensional vector. Think of it as giving each word coordinates in a massive space. In this space, similar words end up near each other. "Dog" might be close to "puppy" and "canine," while "car" would be far away.

The original Transformer used 512-dimensional vectors. Larger models go much further: the biggest GPT-3 model uses 12,288 dimensions. More dimensions give the model more room to capture nuance in word meanings, at the cost of more computation.

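Actually, this isn't entirely new. Earlier methods like Word2Vec used similar ideas. The difference is that Word2Vec gives each word one fixed vector, while in a Transformer the embedding is only the starting point: the attention layers turn it into a context-dependent representation, so "bat" in a baseball sentence ends up different from "bat" the animal.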

The word-embedding image above shows how semantically related words like "Dog," "Wolf," and "Tiger" cluster together when the space is drawn in 2D, reflecting their relationships in meaning.
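Under the hood, an embedding layer is essentially a lookup table: row i holds the vector for token ID i. The sketch below uses hand-picked 3-dimensional vectors (hypothetical values; a trained model learns its own) and cosine similarity, a common way to measure how closely two embeddings point in the same direction.

```python
import math

# Hypothetical vocabulary and embedding table, hand-picked for illustration.
# A trained model learns these values and uses far more dimensions.
vocab = {"dog": 0, "puppy": 1, "car": 2}
embedding_table = [
    [0.90, 0.80, 0.10],  # row 0: "dog"
    [0.85, 0.90, 0.15],  # row 1: "puppy"
    [0.10, 0.05, 0.95],  # row 2: "car"
]

def embed(word):
    # The embedding layer just looks up the row for the token's ID.
    return embedding_table[vocab[word]]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(round(cosine_similarity(embed("dog"), embed("puppy")), 3))  # close to 1
print(round(cosine_similarity(embed("dog"), embed("car")), 3))    # much lower
```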

Don't Forget Position

Here's a problem. If the model processes all words in parallel, how does it know word order? "Dog bites man" is very different from "Man bites dog."

That's where positional encoding comes in. The model adds position information directly to each word's vector. Now each vector contains both meaning and position.

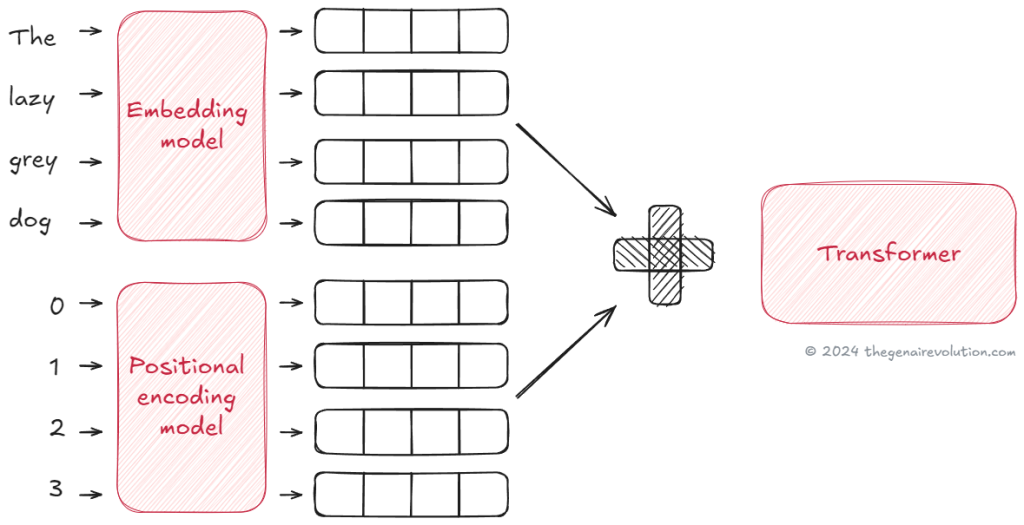

This image illustrates how embeddings and positional encoding work together in a Transformer model. Words are converted into vector representations by the Embedding model, capturing their semantic meanings. At the same time, the Positional Encoding model assigns a unique position to each word in the sequence, encoding the order of words. These two sets of information are then combined, ensuring the Transformer can understand both the content and the sequence of words in the sentence.
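The original Transformer used fixed sinusoidal positional encodings: for position pos and dimension-pair index i, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). Here's a minimal sketch; the 4-dimensional "dog" vector is made up. Many newer models use other schemes, such as learned or rotary position embeddings, which this code does not cover.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal positional encoding as defined in the original Transformer."""
    pe = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
        angle = position / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_position(embedding, position):
    # The encoding is simply added element-wise to the word embedding.
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

dog = [0.9, 0.8, 0.1, 0.3]  # hypothetical embedding for "dog"
print(add_position(dog, 0))  # "Dog bites man": dog at position 0
print(add_position(dog, 2))  # "Man bites dog": dog at position 2
```

The same word gets a different input vector at each position, which is how word order survives parallel processing.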

Multi-Headed Attention (The Real Magic)

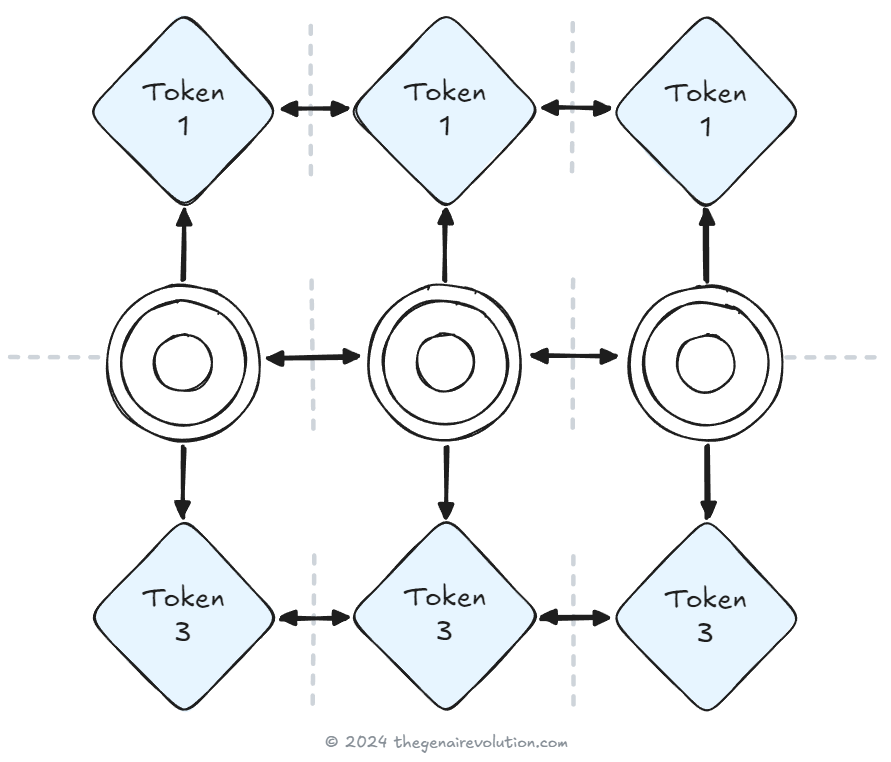

Now we get to the heart of it. The model computes multiple sets of attention weights in parallel. We call these "heads."

Each head learns to focus on different aspects of language. One might track subject-verb relationships. Another might focus on adjectives modifying nouns. A third might identify cause and effect.

The fascinating part? We don't tell the heads what to learn. They figure it out during training. Models typically have anywhere from 12 to over 100 attention heads per layer, each free to pick up different patterns in language.
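Here's a minimal sketch of multi-head attention in plain Python, following the original design: each head has its own query, key, and value projections into a smaller space (d_model / n_heads dimensions), the heads run independently, and their outputs are concatenated and mixed by an output matrix `w_o`. The weights are seeded random numbers standing in for learned ones, so these heads won't "specialize" the way trained heads do.

```python
import math
import random

def project(x, w):
    """Multiply row vector x by matrix w (a list of rows)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(xs, w_q, w_k, w_v):
    """One head of scaled dot-product self-attention."""
    qs = [project(x, w_q) for x in xs]
    ks = [project(x, w_k) for x in xs]
    vs = [project(x, w_v) for x in xs]
    scale = math.sqrt(len(ks[0]))
    out = []
    for q in qs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in ks])
        out.append([sum(w * v[j] for w, v in zip(weights, vs)) for j in range(len(vs[0]))])
    return out

def multi_head_attention(xs, heads, w_o):
    """heads is a list of (w_q, w_k, w_v) tuples, one per head."""
    per_head = [attention_head(xs, *h) for h in heads]  # heads run independently
    # Concatenate each token's outputs from all heads, then mix them with w_o.
    concat = [sum((head_out[t] for head_out in per_head), []) for t in range(len(xs))]
    return [project(c, w_o) for c in concat]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, n_heads = 8, 2
d_head = d_model // n_heads  # each head works in a smaller subspace
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(n_heads)]
w_o = random_matrix(n_heads * d_head, d_model, rng)
tokens = [[rng.uniform(-1.0, 1.0) for _ in range(d_model)] for _ in range(5)]
out = multi_head_attention(tokens, heads, w_o)
print(len(out), len(out[0]))  # 5 8: one d_model-wide vector per token
```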

Processing Through Networks

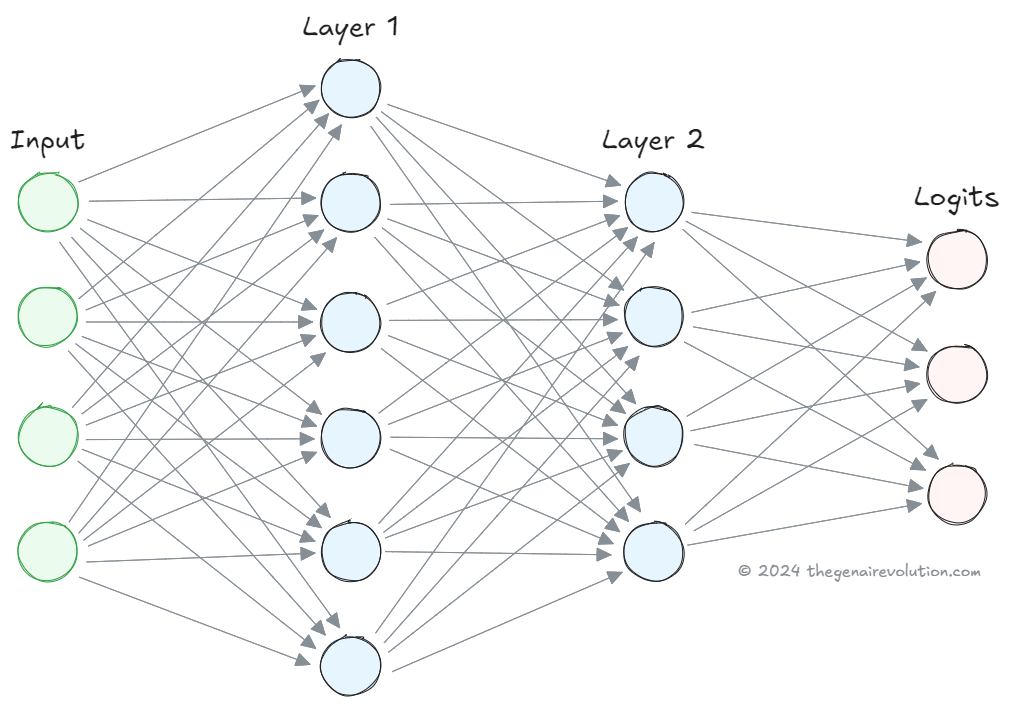

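After attention, each vector passes through a feed-forward neural network: two linear transformations with a nonlinearity in between, applied to every position independently. Attention lets tokens share information with each other; the feed-forward network then refines each token's representation on its own. In the full model, attention and feed-forward layers alternate in a deep stack (the original Transformer's encoder used six such layers).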

This image represents a fully-connected feed-forward neural network, where each node in one layer is connected to every node in the next layer, illustrating the flow of information from the input layer through hidden layers to the output layer.

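After the last layer of the stack, a linear output layer projects each vector onto the vocabulary, producing "logits": one raw score for each possible next token. They're not probabilities yet, just unnormalized numbers where a higher score means the model favors that token more.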
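Here's a minimal sketch of the position-wise feed-forward network from the original Transformer, FFN(x) = max(0, xW1 + b1)W2 + b2, followed by a final linear layer that turns the refined vector into logits. The random weights, tiny sizes, and the name `w_vocab` for the output layer are illustrative assumptions; real models also wrap each sublayer with residual connections and layer normalization, which are omitted here.

```python
import random

def linear(x, w, b):
    """Compute x @ w + b for a single vector x."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: ReLU(x W1 + b1) W2 + b2, applied to one token's vector."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # expand to d_ff, apply ReLU
    return linear(hidden, w2, b2)  # project back down to d_model

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(42)
# Tiny illustrative sizes; the original Transformer used d_model=512, d_ff=2048.
d_model, d_ff, vocab_size = 4, 16, 6
w1, b1 = random_matrix(d_model, d_ff, rng), [0.0] * d_ff
w2, b2 = random_matrix(d_ff, d_model, rng), [0.0] * d_model
# Hypothetical final output layer: d_model -> one score per vocabulary entry.
w_vocab, b_vocab = random_matrix(d_model, vocab_size, rng), [0.0] * vocab_size

x = [0.5, -0.2, 0.1, 0.9]  # one token's vector after the attention sublayer
refined = feed_forward(x, w1, b1, w2, b2)
logits = linear(refined, w_vocab, b_vocab)  # raw, unnormalized scores
print([round(v, 3) for v in logits])
```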

Making the Final Choice

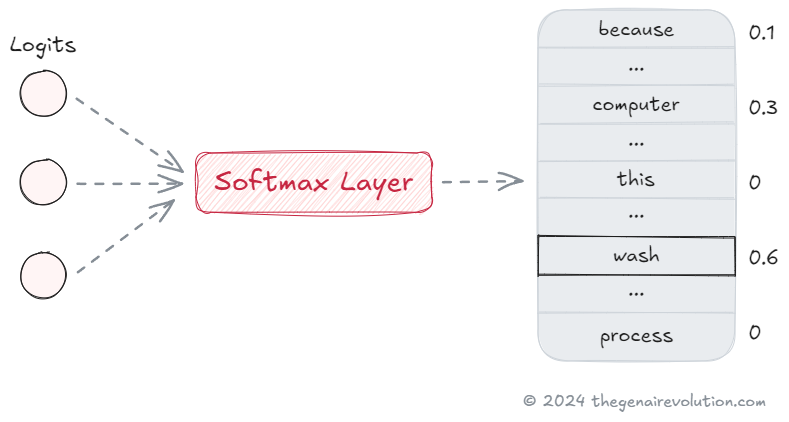

The softmax layer converts logits into actual probabilities. Every token in the vocabulary gets a probability between 0 and 1, and together they add up to 1 (100%).

The model then selects the next word based on these probabilities. There are different strategies here. Sometimes it picks the highest probability. Sometimes it samples randomly based on the distribution. I'll cover these techniques in another post.
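Here's a minimal sketch of the softmax step and two of the selection strategies mentioned above: greedy decoding (always take the most likely token) and sampling from the distribution. The `temperature` parameter is a common extra knob, included as an illustration: dividing logits by a value below 1 sharpens the distribution, and a value above 1 flattens it. The vocabulary and logits are made up.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities that sum to 1."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["the", "dog", "cat", "ran", "."]
logits = [1.0, 3.0, 2.5, 0.5, -1.0]  # made-up scores for illustration

probs = softmax(logits)
# Greedy decoding: always take the single most likely token.
greedy = vocab[max(range(len(probs)), key=lambda i: probs[i])]
# Sampling: draw a token at random, weighted by its probability.
rng = random.Random(7)
sampled = rng.choices(vocab, weights=softmax(logits, temperature=0.8), k=1)[0]
print(greedy, sampled)
```

Greedy decoding is deterministic but can get repetitive; sampling adds variety at the cost of occasionally picking a less likely token.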

Putting It All Together

Let me trace through the complete process:

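Text gets tokenized into IDs

IDs become embedding vectors, with position information added

Vectors go through multi-headed attention layers

Feed-forward networks process the attention output (attention and feed-forward layers alternate through many stacked layers)

A final linear layer produces logits, and softmax converts them to probabilities

Model selects the next token

The new token is appended to the input, and the process repeats until we hit an end token (or a length limit)

Tokens get converted back to text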
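The whole loop can be sketched in a few lines of Python. The `toy_model` function is hypothetical: a hand-written table that scores the next token from the last token only, standing in for a real Transformer, which would run embeddings, attention, and feed-forward layers over the entire sequence. Everything else (tokenize, score, softmax, pick, append, stop at an end token, detokenize) mirrors the steps listed above, using greedy selection.

```python
import math

# Hypothetical stand-in for a trained Transformer: a hand-written table that
# scores the next token from the last token only. A real model would run
# embeddings, attention, and feed-forward layers over the whole sequence.
VOCAB = ["<end>", "the", "dog", "bites", "a", "man"]
NEXT_LOGITS = {
    "the":   [0.0, 0.0, 2.0, 0.0, 0.0, 1.0],
    "dog":   [0.0, 0.0, 0.0, 3.0, 0.0, 0.0],
    "bites": [0.0, 0.5, 0.0, 0.0, 2.0, 0.0],
    "a":     [0.0, 0.0, 1.0, 0.0, 0.0, 2.0],
    "man":   [3.0, 0.0, 0.0, 0.0, 0.0, 0.0],
}

def toy_model(token_ids):
    return NEXT_LOGITS[VOCAB[token_ids[-1]]]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def generate(prompt, max_new_tokens=10):
    ids = [VOCAB.index(w) for w in prompt.split()]  # tokenize into IDs
    for _ in range(max_new_tokens):
        probs = softmax(toy_model(ids))  # logits -> probabilities
        next_id = max(range(len(probs)), key=lambda i: probs[i])  # greedy pick
        if VOCAB[next_id] == "<end>":  # stop at the end token
            break
        ids.append(next_id)  # feed the new token back in and repeat
    return " ".join(VOCAB[i] for i in ids)  # convert IDs back to text

print(generate("the"))  # the dog bites a man
```

Softmax doesn't change which token greedy selection picks, but it's needed as soon as you switch to sampling.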

Different Flavors of Transformers

The original Transformer had both encoder and decoder. But we've developed variations for different tasks.

Encoder-Only Models

Models like BERT focus purely on understanding text, not generating it. They're great for classification, sentiment analysis, or extracting information. The encoder processes the entire input at once to build a rich understanding.

Other examples include RoBERTa and DistilBERT. These models excel when you need deep comprehension but don't need to generate new text.

Decoder-Only Models

This is what most people think of with LLMs. GPT, Claude, LLaMA. These models generate text by predicting the next word based on previous words. They're built for writing, conversation, and creative tasks.
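A big practical difference between encoder-only and decoder-only models comes down to the attention mask. An encoder lets every token attend to every other token; a decoder uses a causal mask, so each token sees only itself and earlier tokens, which is what lets it be trained to predict the next word. Here's a minimal sketch with made-up attention scores. Real implementations typically add negative infinity to masked scores before the softmax; dropping masked positions directly, as below, gives the same weights.

```python
import math

def masked_softmax(scores, mask):
    """Softmax over scores, giving zero weight where mask is False."""
    m = max(s for s, keep in zip(scores, mask) if keep)
    exps = [math.exp(s - m) if keep else 0.0 for s, keep in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

def attention_mask(n, causal):
    # Encoder-style (causal=False): every token may attend to every token.
    # Decoder-style (causal=True): token i may attend only to tokens 0..i.
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]

# Made-up raw attention scores for a 4-token sequence (same for every row).
scores = [[0.5, 1.0, 0.2, 0.3] for _ in range(4)]
for causal in (False, True):
    mask = attention_mask(4, causal)
    weights = [masked_softmax(s, m) for s, m in zip(scores, mask)]
    print("causal" if causal else "bidirectional")
    for row in weights:
        print([round(w, 2) for w in row])
```

In the causal case, the first token can only attend to itself, and each later token sees one more position than the one before it.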

Encoder-Decoder Models

Models like T5 and BART use both components. They're well suited to translation or summarization, where you need to deeply understand the input text and then generate different output text. The encoder processes the input, and the decoder generates the output while attending to the encoder's representation.
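The link between the two halves is cross-attention: queries come from the decoder's current states, while keys and values come from the encoder's output, so every generated token can look back at the whole input. Here's a minimal sketch with one loud simplification: the learned query, key, and value projections are left out (treated as identity), and the state vectors are made up.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_states, encoder_states):
    """Each decoder position attends over every encoder position.

    Queries come from the decoder; keys and values come from the encoder.
    Simplification: learned Q/K/V projections are omitted (identity).
    """
    d = len(encoder_states[0])
    outputs = []
    for q in decoder_states:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in encoder_states]
        weights = softmax(scores)
        # Blend the encoder's vectors according to how relevant each one is.
        outputs.append([sum(w * v[j] for w, v in zip(weights, encoder_states)) for j in range(d)])
    return outputs

encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # e.g. 3 source-sentence tokens
decoder_states = [[2.0, 0.0], [0.0, 2.0]]  # 2 target tokens generated so far
for row in cross_attention(decoder_states, encoder_states):
    print([round(v, 3) for v in row])
```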

What This Means for You

Understanding how Transformers work isn't just academic curiosity. It helps you use these tools more effectively.

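When you know about attention mechanisms, you understand why providing clear, relevant context matters. When you understand tokenization, you know why models sometimes stumble on tasks like spelling or counting letters, and why context limits and pricing are measured in tokens rather than words. And when you know that attention compares every token with every other token, you can see why context windows have limits: the cost of standard attention grows quadratically with sequence length.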

We're still discovering what these models can do, but they're already changing fields from medicine to education to the creative arts.

The Transformer architecture didn't just improve language models. It revolutionized how machines understand and generate not just text, but images, audio, and video too. By understanding these layers and mechanisms, you're better equipped to harness their power in whatever field you're working in.

And honestly? We're just getting started.