Model Context Protocol (MCP) Explained

Understand how MCP standardizes tool and data access so your agents interoperate, audit safely, and ship faster, consistently across environments.

You know what happens when you try to swap GPT for Claude in production? Nine out of fourteen tools break. Just like that. Your security team throws up their hands because they can't certify 23 different connectors that all do things slightly differently. This mess happens because every model provider has their own special way of implementing function calling. OpenAI does it one way, Anthropic does it another, and don't even get me started on Gemini. The argument coercion is all over the place. Invocation policies? Completely different. Schema formats? Good luck finding consistency there.

The Model Context Protocol (MCP) fixes this whole situation by basically saying "enough is enough" and decoupling tool semantics from model APIs. It uses this standardized host, client, and server architecture that actually makes sense. I'll show you how MCP keeps tool definitions separate from all those provider quirks, how the discovery handshake enforces least privilege (which your security team will love), and how structured error contracts make those scary side-effecting tools actually auditable and predictable.

Why this matters

Schema drift across providers breaks tools silently. Here's the thing: every provider wraps tool definitions differently. OpenAI's function calling takes a JSON Schema under a parameters field. Claude's tool use expects the schema under input_schema. Gemini's function declarations accept only a subset of the OpenAPI schema vocabulary. So when you switch models (and you will), argument types start mismatching. Parameters that were optional suddenly become required. Error handling changes in ways you didn't expect. Every integration becomes a one-off adapter that keeps expanding your maintenance and audit surface. It's exhausting.

Connector proliferation blocks security review. Every bespoke integration you build introduces a new trust boundary. Your security team - bless them - has to certify input validation, output masking, and error propagation for each connector. With 23 connectors? That's 23 separate audits. But when you standardize on MCP, you collapse all of that down to a single protocol review plus per-server capability checks. Much more manageable.

Non-deterministic tool behavior undermines testing. Without a standard contract, the same tool behaves differently depending on which model invokes it. You'll see retries when you don't expect them, partial writes that shouldn't happen, or worse, silent failures that you only discover in production. Building on MCP with structured errors and idempotency keys makes that behavior predictable. And predictable behavior means your tests actually mean something across providers.

How it works

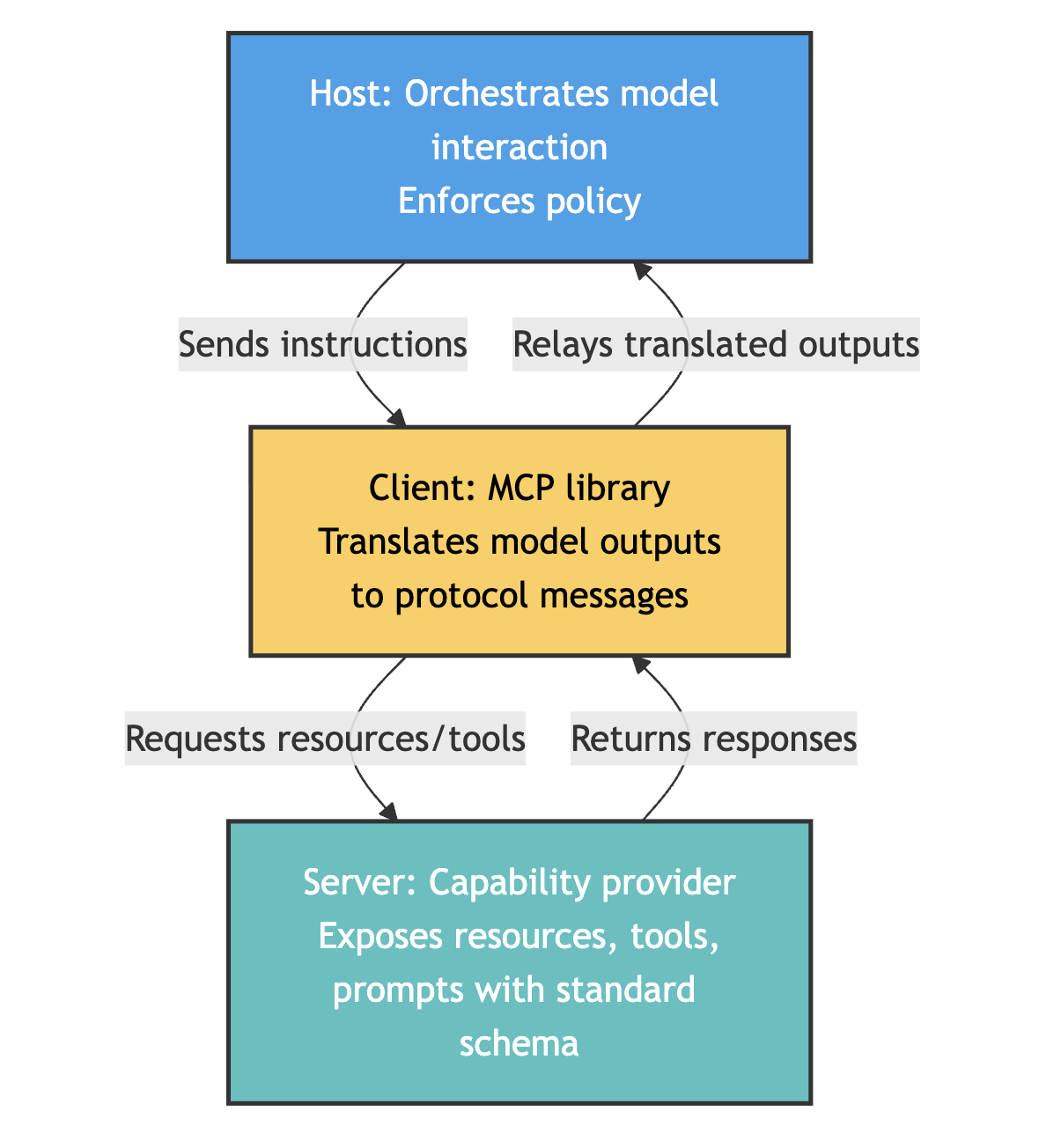

Host, client, and server split decouples semantics from execution

The architecture is actually pretty straightforward once you see it:

Host. This is your application that orchestrates model interaction and enforces policy.

Client. The MCP library that translates model outputs into protocol messages.

Server. Your capability provider that exposes resources, tools, and prompts with a standard schema.

What this split means in practice is that you can swap OpenAI for Claude by changing only the host's model API call. You don't have to rewrite tool definitions. You don't have to touch your business logic. It just works.
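To make that concrete, here is a minimal sketch of what "changing only the host's model API call" can look like. It keeps one provider-neutral tool definition (shaped like an MCP tool entry, using the create_ticket tool this article walks through later) and derives the provider-specific wrappers from it. The helper names to_openai and to_anthropic are mine, and the wrapper shapes reflect the OpenAI Chat Completions and Anthropic Messages tool formats as I understand them, so check the current provider docs before relying on them.

```python
from typing import Any

# One provider-neutral definition, shaped like an entry in an MCP tool listing.
TOOL: dict[str, Any] = {
    "name": "create_ticket",
    "description": "Open an incident ticket.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "severity": {"type": "string", "enum": ["low", "medium", "high"]},
        },
        "required": ["title", "severity"],
    },
}


def to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Wrap the neutral definition in an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic(tool: dict[str, Any]) -> dict[str, Any]:
    """Wrap the neutral definition in an Anthropic-style tool entry."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }
```

The schema itself is never rewritten. Only the thin wrapper around it changes, and that wrapper lives in the host.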

Discovery handshake surfaces capabilities with least privilege

When a connection opens, the client and server run an initialization handshake in which the server declares which kinds of capabilities it supports. The client then lists them and gets back resource URIs, tool schemas, and prompt templates. The host applies your policy, filtering that list down to only what the current task actually needs. The model only sees the pruned capability set, so it only sees what it's allowed to invoke.

Ask yourself: What does this specific task actually need to read or write? Which tools should be visible? Which resource paths are safe? This simple, deterministic handshake replaces all those ad hoc permission checks that tend to get scattered across connectors in ways nobody can track.
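The filtering step can be sketched in a few lines. This is an illustration under my own assumptions, not part of the protocol: the Capability type, the TASK_ALLOWLIST table, the task names, and entries like delete_ticket and tickets://open are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    kind: str  # "tool", "resource", or "prompt"
    name: str  # tool name, resource URI, or prompt name


# Hypothetical policy table: task -> the (kind, name) pairs it may see.
TASK_ALLOWLIST: dict[str, set[tuple[str, str]]] = {
    "incident_triage": {("tool", "create_ticket"), ("resource", "tickets://open")},
    "reporting": {("resource", "tickets://open")},
}


def filter_capabilities(task: str, advertised: list[Capability]) -> list[Capability]:
    """Return only the advertised capabilities this task is allowed to see."""
    allowed = TASK_ALLOWLIST.get(task, set())  # unknown task: deny everything
    return [cap for cap in advertised if (cap.kind, cap.name) in allowed]
```

Because an unknown task maps to an empty set, the default is deny, which is the behavior you want when someone adds a new task and forgets the policy entry.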

Structured schemas remove prompt-engineered parsing

Resources are read endpoints addressed by URI, each with a declared content type. Tools declare their input parameters as JSON Schema and can declare their output shapes the same way, and you document each tool's error codes alongside them. Prompts define reusable templates. The model gets machine-readable contracts, not some vague free-text description that it might misinterpret. You don't need fragile regex parsing or clever prompt hacks to extract structured data anymore. (If you're curious about how LLMs handle growing context and why they sometimes struggle, check out the guide on context rot and why LLMs "forget" as their memory grows.)

Idempotency and retriable errors make side effects safe

Every side-effecting tool invocation should carry an idempotency key. The protocol doesn't hand you one, so treat it as a convention your servers enforce, for example as a required tool argument. Then if the model retries after a transient failure (and it will), the server deduplicates the request. Errors come back with a code, a message, and a retriable flag, again as a convention you define in the error payload. The host can tell the difference between a database timeout that should trigger a retry and an invalid parameter that should abort. This transforms unreliable model-driven tool chains into auditable, repeatable workflows.
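Here is a minimal in-memory sketch of the server side of that idea. The names ToolError and IdempotencyCache are hypothetical, the one-hour default TTL is just the value I suggest later in this article, and a real deployment would use a shared store rather than a process-local dict.

```python
import time
from typing import Any, Callable


class ToolError(Exception):
    """Structured error: a stable code, a human message, and a retry hint."""

    def __init__(self, code: str, message: str, retriable: bool) -> None:
        super().__init__(f"{code}: {message}")
        self.code = code
        self.message = message
        self.retriable = retriable


class IdempotencyCache:
    """Remember results by idempotency key so retries do not repeat side effects."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}

    def run(self, key: str, operation: Callable[[], Any]) -> Any:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # duplicate request: return the cached result
        # Failures propagate and are not cached, so a retry executes again.
        result = operation()
        self._entries[key] = (now, result)
        return result
```

Not caching failures is a deliberate choice in this sketch: a retriable TIMEOUT should let the next attempt with the same key actually run.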

A quick example

Let me walk you through a create_ticket tool to show how this works:

Input schema: title as string, severity as enum [low, medium, high], description as string (optional).

Output schema: ticket_id as string, url as string.

Errors: INVALID_PARAM (not retriable), TIMEOUT (retriable), RATE_LIMIT (retriable).
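Written out as data, that contract might look like the sketch below. The field layout (inputSchema, outputSchema, a separate ERRORS table) and the validate_input helper are my assumptions for illustration, and I'm assuming title and severity are required since only description is marked optional. The hand-rolled validator only covers what this one tool needs. In production you would use a real JSON Schema validator.

```python
from typing import Any

CREATE_TICKET: dict[str, Any] = {
    "name": "create_ticket",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "severity": {"type": "string", "enum": ["low", "medium", "high"]},
            "description": {"type": "string"},
        },
        "required": ["title", "severity"],  # assumption: description is the only optional field
        "additionalProperties": False,
    },
    "outputSchema": {
        "type": "object",
        "properties": {"ticket_id": {"type": "string"}, "url": {"type": "string"}},
        "required": ["ticket_id", "url"],
    },
}

# Error code -> whether the host may retry.
ERRORS: dict[str, bool] = {"INVALID_PARAM": False, "TIMEOUT": True, "RATE_LIMIT": True}


def validate_input(args: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call is valid."""
    schema = CREATE_TICKET["inputSchema"]
    problems = [f"missing required field: {name}"
                for name in schema["required"] if name not in args]
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown field: {name}")
        elif not isinstance(value, str):
            problems.append(f"{name} must be a string")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"{name} must be one of {spec['enum']}")
    return problems
```

Any non-empty result maps to INVALID_PARAM, which is not retriable: sending the same bad arguments again won't help.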

Here's the flow:

The server advertises create_ticket during discovery. The host filters it in only if the task is incident triage.

The model proposes a call with inputs. The client validates against JSON Schema. The server enforces required fields and constraints.

The server executes with an idempotency key. If a retry arrives with the same key, you return the cached result.

If the upstream system times out, you return code TIMEOUT with retriable set to true. The host decides whether to retry with backoff or escalate.
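The host's retry decision in that last step can be sketched as follows. The result shape (a dict with an optional "error" entry holding code, message, and retriable) and the call_with_retry helper are assumptions of mine, not something the protocol prescribes. The invoke callable is expected to reuse the same idempotency key on every attempt.

```python
import time
from typing import Any, Callable


def call_with_retry(
    invoke: Callable[[], dict[str, Any]],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, Any]:
    """Retry retriable errors with exponential backoff; return the last result."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        result = invoke()
        error = result.get("error")
        if error is None:
            return result  # success
        if not error["retriable"] or attempt == max_attempts:
            return result  # abort now, or give up and let the caller escalate
        sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    raise AssertionError("unreachable")
```

Returning the final error instead of raising keeps the escalation decision with the host, which is where the policy lives.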

And here's the beautiful part - you can run this exact same tool contract with OpenAI, Claude, or Gemini. You don't change the schema. You don't change the server logic. It just works.

What you should do

Define tool schemas with strict types and version pinning

Use JSON Schema with explicit types, required fields, constraints, and enums.

Pin schema versions in the tool definition. This avoids those silent breaking changes that ruin your weekend.

Include fields like name, version, input.properties with type and required, output.properties, error.code, and error.retriable.

Validate every request on the server. Reject malformed inputs with non-retriable errors.

Implement least-privilege filtering at the host

Maintain an allowlist that maps tasks to capabilities.

Before the capability list reaches the model, filter it down to what the task actually needs.

Log every capability you surface to the model. Set up alerts if the model attempts to invoke a tool that's not on the allowlist.
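Filtering what the model sees is only half of it. You also want a check at invocation time, in case the model asks for something it was never shown. A minimal sketch, assuming a hypothetical allowlist mapping from task name to tool names and a logger name I made up:

```python
import logging
from collections.abc import Mapping

logger = logging.getLogger("mcp.host.policy")  # hypothetical logger name


class CapabilityDenied(Exception):
    """Raised when the model requests a tool outside the task's allowlist."""


def authorize_call(task: str, tool_name: str,
                   allowlist: Mapping[str, frozenset[str]]) -> None:
    """Allow the call only if the tool is allowlisted for this task; log both outcomes."""
    allowed = allowlist.get(task, frozenset())  # unknown task: deny by default
    if tool_name not in allowed:
        # A WARNING record is something your alerting can key off.
        logger.warning("denied tool call: task=%s tool=%s", task, tool_name)
        raise CapabilityDenied(f"{tool_name} is not allowed for task {task}")
    logger.info("allowed tool call: task=%s tool=%s", task, tool_name)
```

Call this in the host right before dispatching to the server, so a denied call never leaves your process.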

Adopt idempotency keys and structured error codes for write tools

Require an idempotency key on any tool that mutates state. No exceptions.

Store keys with a TTL (I usually go with 1 hour). Return cached results for duplicates.

Return errors with code, message, and a retriable boolean. Use codes like TIMEOUT and INVALID_PARAM.

Set alerts for more than 2 percent tool error rate or repeated non-retriable failures. These often signal schema mismatches or the model getting confused.
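The 2 percent alert is easy to prototype with a sliding window. This is a sketch only: the ErrorRateMonitor name, the window size, and the min_calls guard (which avoids alerting on the very first failure) are my own choices, and in practice you'd likely express this as a rule in your metrics system instead.

```python
from collections import deque


class ErrorRateMonitor:
    """Track recent tool-call outcomes and flag when the error rate is too high."""

    def __init__(self, window: int = 500, threshold: float = 0.02,
                 min_calls: int = 50) -> None:
        self._outcomes: deque[bool] = deque(maxlen=window)
        self._threshold = threshold
        self._min_calls = min_calls

    def record(self, ok: bool) -> bool:
        """Record one call; return True if the alert should fire."""
        self._outcomes.append(ok)
        total = len(self._outcomes)
        if total < self._min_calls:
            return False  # not enough data to judge yet
        failures = sum(1 for outcome in self._outcomes if not outcome)
        return failures / total > self._threshold
```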

Set performance and observability budgets

Target p95 capability invocation latency under 250 milliseconds, including validation.

Cache resource reads with a TTL of at least 1 minute where it's safe to do so.

Instrument every tool call with start and end timestamps, input hash, output size, and error code.

Export metrics to your observability stack. Use these signals to figure out whether the model, the network, or the server logic is your bottleneck.
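Here's a rough sketch of that instrumentation as a wrapper around a tool handler. The instrumented_call helper, the record field names, and the INTERNAL fallback code are hypothetical. The emit callback stands in for whatever exporter your observability stack provides.

```python
import hashlib
import json
import time
from typing import Any, Callable, Optional


def instrumented_call(
    tool_name: str,
    args: dict[str, Any],
    handler: Callable[[dict[str, Any]], Any],
    emit: Callable[[dict[str, Any]], None],
) -> Any:
    """Run a tool handler and emit one metrics record, on success or failure."""
    start = time.time()
    # Hash a canonical JSON form so raw inputs never land in the metrics.
    canonical = json.dumps(args, sort_keys=True).encode("utf-8")
    input_hash = hashlib.sha256(canonical).hexdigest()
    error_code: Optional[str] = None
    output_size = 0
    try:
        result = handler(args)
        output_size = len(json.dumps(result).encode("utf-8"))
        return result
    except Exception as exc:
        # Use the structured code if the error carries one.
        error_code = getattr(exc, "code", "INTERNAL")
        raise
    finally:
        emit({
            "tool": tool_name,
            "start": start,
            "end": time.time(),
            "input_hash": input_hash,
            "output_size": output_size,
            "error_code": error_code,
        })
```

Sorting keys before hashing means two calls with the same arguments in a different order produce the same hash, which makes duplicate detection in your dashboards much easier.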

Migration plan you can follow

Inventory current tools. List inputs, outputs, side effects, and known error cases.

Define JSON Schemas. Add enums, min and max values, and formats like email or uri where they make sense.

Wrap tools behind an MCP server. Keep the original implementations for now. Focus on protocol compliance first.

Add idempotency and structured errors. Pick stable error codes. Document which ones are retriable.

Implement host-side allowlists. Start with read-only capabilities. Expand as you gain confidence.

Run shadow traffic. Compare outputs and latency across providers. Log discrepancies against schemas.

Cut over provider by provider. Swap the host model call. Don't change tool definitions.

Monitor and tune. Watch error rates and retries. Update schemas and prompts only with version bumps.

Common pitfalls and how to avoid them

Implicit type coercion. Don't rely on the model to coerce types. Enforce with JSON Schema and server-side validation.

Schema evolution without pinning. Always version inputs and outputs. Deprecate old versions with a clear window.

Side effects without idempotency. Enforce idempotency keys on any write path. Actually test duplicate submissions.

Overexposed capabilities. Default to deny. Only surface tools and resource paths needed for the task at hand.

Leaking secrets or PII in logs. Hash inputs for metrics. Mask sensitive fields at the server.

Context bloat from verbose capability lists. Keep capability catalogs small. Hide prompts and tools that the task will never use.
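For the logging pitfall in the list above, masking can be as simple as a recursive walk over the arguments before they reach a log line. The SENSITIVE_KEYS set and the mask helper below are hypothetical placeholders. Your own list of sensitive fields will depend on your data.

```python
from typing import Any

# Hypothetical field names; replace with the ones that matter in your system.
SENSITIVE_KEYS = frozenset({"email", "phone", "api_key"})


def mask(value: Any) -> Any:
    """Return a copy of value with sensitive dict fields replaced by a marker."""
    if isinstance(value, dict):
        return {
            key: "[masked]" if key in SENSITIVE_KEYS else mask(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [mask(item) for item in value]
    return value
```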

Conclusion: Key takeaways

MCP decouples tool semantics from provider APIs through standard capability contracts, discovery handshakes, and structured error shapes. You avoid schema drift. You collapse the audit surface. You make side-effecting tools deterministic and testable.

Honestly, you should adopt MCP when you're targeting multiple model providers or managing three or more tools. The benefits show up immediately. If you're bound to a single provider with stable function calling, you can probably defer. But revisit when vendor lock-in risk grows or when you need parity across environments.

If you're planning to run across providers like OpenAI, Gemini, and Anthropic Claude, use MCP to isolate tool semantics from model APIs. The host can swap providers without rewriting integrations, which keeps your experiments honest. You're comparing models, not connector quirks.

And if you're still weighing the benefits of different model sizes for your stack, take a look at the article on small vs large language models and when to use each. For a practical process to evaluate and select a model for your app, the guide on how to choose an LLM is worth your time.