How to implement zero-trust security for AI: isolation and egress

Implement zero-trust security for production AI with sandboxed agents, strict egress allowlists, and hardened identity paths. Shrink blast radius, prevent data exfiltration, and prove ROI with auditable controls.

AI agents are integration engines. They call APIs, query databases, read documents, and execute code on your behalf. Each tool, credential, and network path is a potential pivot point. Traditional perimeter security assumes trust inside the boundary. That assumption completely breaks when agents operate at machine speed across dozens of services.

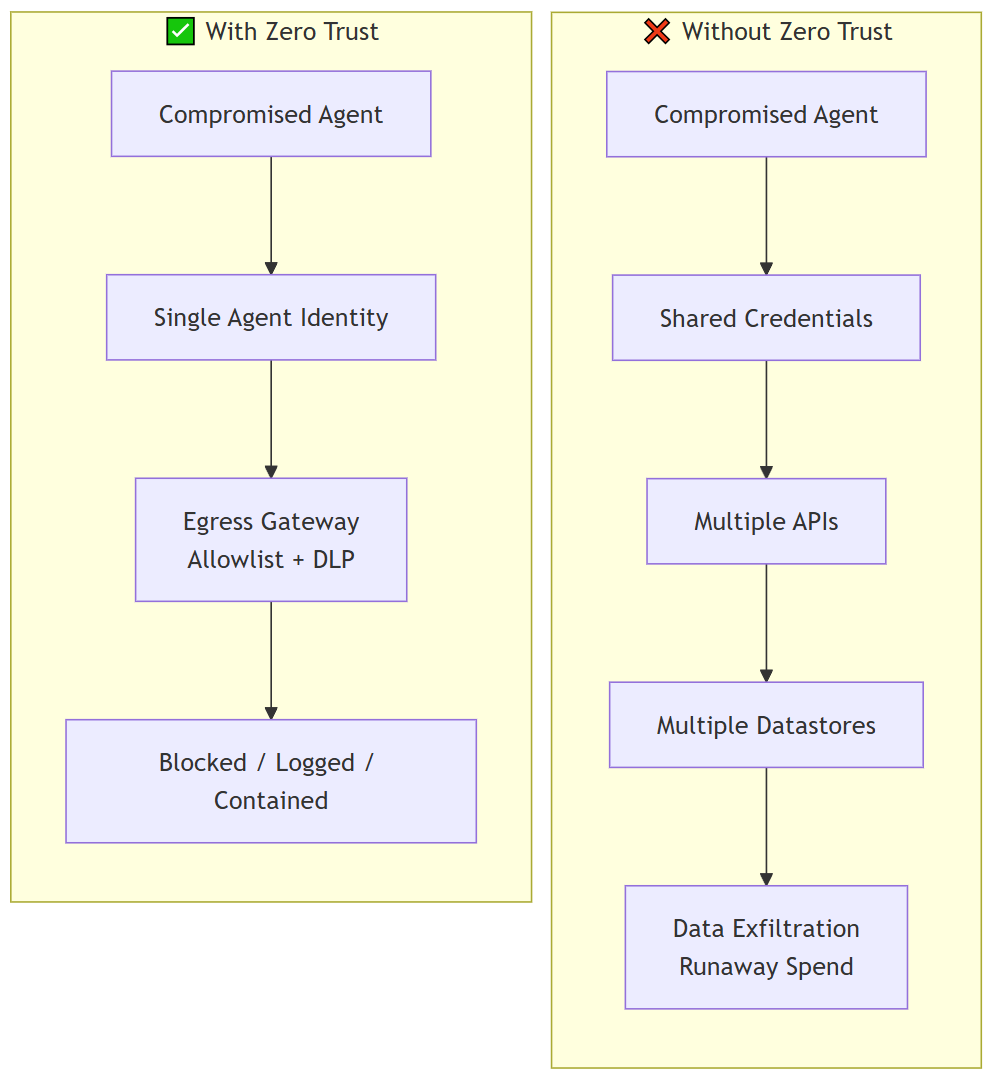

Here's the thing. One compromised tool, one leaked API key, or one successful prompt injection can fan out into multiple systems faster than you can blink. Data exfiltration and runaway API spend are two of the most common business impacts I've seen.

Zero-trust security means you assume breach, verify every request, and enforce least privilege at every boundary. For AI, the boundaries aren't just network segments anymore.

They include tool permissions, identity issuance, runtime environments, and outbound network paths. The goal is simple: make agent failures survivable. When compromise happens, and it will, it should be contained to one agent, one identity, and one narrow set of destinations.

This article gives AI leaders a concrete implementation plan focused on two controls that move the needle immediately: agent isolation and egress lockdown. You'll also learn how to harden identity paths and prove compliance. By the end, you'll have policy decisions, minimum bars for production, and metrics for ROI that you can enforce across your organization.

Implement agent isolation

Agent isolation means each agent runs in its own execution environment with its own identity and its own set of permissions. No shared credentials, no ambient authority, no cross-agent access by default. This is non-negotiable.

Execution environment isolation. Run each agent in a separate container, VM, or serverless function. This limits the blast radius when one agent gets compromised. And yes, I said when, not if. Serverless platforms like AWS Lambda, Google Cloud Functions, or Azure Functions provide strong isolation by default and fast patching with minimal ops overhead. If you already have strong platform engineering, Kubernetes with network policies and pod security standards is a solid choice. But please, avoid shared application servers or monolithic deployments where one breach can access all agent logic. I've seen this go wrong too many times.
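
To make the container option concrete, here's a minimal Python sketch that generates per-agent Kubernetes manifests: a dedicated namespace labeled to enforce the `restricted` Pod Security Standard, and a Pod with its own service account, no privilege escalation, and all Linux capabilities dropped. The `agent-<name>` and `sa-<name>` naming conventions, the `10001` user ID, and the image reference are assumptions for illustration, not values from any real deployment.

```python
"""Per-agent Kubernetes manifests with a locked-down security context.

Sketch only: the naming scheme ("agent-<name>" namespaces, "sa-<name>"
service accounts), the user ID, and the image reference are hypothetical.
"""
import json


def namespace_manifest(agent_name: str) -> dict:
    """One namespace per agent, enforcing the 'restricted' Pod Security Standard."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": f"agent-{agent_name}",
            "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
        },
    }


def agent_pod_manifest(agent_name: str, image: str) -> dict:
    """A Pod that runs a single agent as an unprivileged, isolated workload."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": f"agent-{agent_name}",
            "namespace": f"agent-{agent_name}",
            "labels": {"agent": agent_name},
        },
        "spec": {
            # Dedicated service account: the agent never borrows another workload's identity.
            "serviceAccountName": f"sa-{agent_name}",
            # Don't mount a Kubernetes API token unless the agent actually needs one.
            "automountServiceAccountToken": False,
            "securityContext": {
                "runAsNonRoot": True,
                "runAsUser": 10001,
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [
                {
                    "name": "agent",
                    "image": image,
                    "securityContext": {
                        "allowPrivilegeEscalation": False,
                        "readOnlyRootFilesystem": True,
                        "capabilities": {"drop": ["ALL"]},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Wrap both objects in a v1 List so a single `kubectl apply -f -` accepts them.
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            namespace_manifest("invoice-reader"),
            agent_pod_manifest("invoice-reader", "registry.example.com/invoice-reader:1.0"),
        ],
    }
    print(json.dumps(bundle, indent=2))
```

Running it prints a `List` you can pipe to `kubectl apply -f -`. Serverless platforms give you comparable isolation without you managing these fields yourself.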

Per-agent credentials. Issue a unique identity to each agent. Use workload identity federation on cloud platforms or service accounts with short-lived tokens. Never share API keys across agents, and never embed long-lived secrets in code or environment variables. Instead, have each agent fetch its credentials at runtime from a secret manager like AWS Secrets Manager, Google Secret Manager, or HashiCorp Vault, authenticating with its own workload identity. Rotate credentials automatically and revoke them when an agent is decommissioned. This isn't optional anymore.
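
A cheap way to enforce these rules is a pre-deployment check. The sketch below assumes a hypothetical input shape: each agent's rendered environment as a dictionary, plus a mapping from agent to credential ID. It flags values that look like long-term AWS access key IDs (which start with `AKIA`, while temporary STS keys start with `ASIA`) and variable names that usually hold static secrets, and it reports any credential shared by more than one agent. The name heuristic is illustrative, not exhaustive.

```python
"""Pre-deployment credential hygiene checks.

Sketch only: the name heuristic and the input shapes are assumptions.
"""
import re
from collections import defaultdict
from typing import Dict, List

# Long-term AWS access key IDs start with "AKIA"; temporary STS keys start with "ASIA".
LONG_LIVED_AWS_KEY = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
# Variable names that usually carry static secrets (illustrative, not exhaustive).
SECRET_NAME_HINT = re.compile(r"SECRET|API_KEY|PASSWORD", re.IGNORECASE)


def find_env_secrets(env: Dict[str, str]) -> List[str]:
    """Names of environment variables that appear to embed a static secret."""
    return sorted(
        name
        for name, value in env.items()
        if LONG_LIVED_AWS_KEY.search(value) or (value and SECRET_NAME_HINT.search(name))
    )


def find_shared_credentials(agent_creds: Dict[str, str]) -> Dict[str, List[str]]:
    """Credential IDs used by more than one agent, mapped to the agents sharing them."""
    users = defaultdict(list)
    for agent, cred_id in agent_creds.items():
        users[cred_id].append(agent)
    return {cred: sorted(agents) for cred, agents in users.items() if len(agents) > 1}
```

Run it in CI against rendered deployment configs; any hit should fail the build rather than produce a warning someone can ignore.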

Least privilege by default. Grant each agent only the permissions it needs to perform its specific tasks. If an agent only reads from a database, don't give it write access. If it only calls one external API, don't give it access to others. Use IAM roles, scoped API tokens, and database-level permissions to enforce this. Document the permission set for each agent in a permission sheet that maps agent name, identity, tools, and allowed actions. This sheet becomes your audit trail and the source of truth that your IAM policies and tool gateways enforce. Trust me, you'll thank yourself later when audit season comes around.
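
Here's one way to keep the permission sheet as code, so the same file serves auditors and the runtime check. It's a minimal sketch with a hypothetical agent, identity, and tool; the design choice that matters is deny by default: an unknown agent, an unlisted tool, or an unlisted action all return `False`.

```python
"""Permission sheet as code: one entry per agent, deny by default.

Sketch only: the agent, identity, and tool names are hypothetical.
"""
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class AgentPermissions:
    agent: str
    identity: str                                # workload identity or service account
    tools: FrozenSet[str]                        # tools the agent may invoke at all
    allowed_actions: FrozenSet[Tuple[str, str]]  # explicit (tool, action) pairs


PERMISSION_SHEET = {
    entry.agent: entry
    for entry in [
        AgentPermissions(
            agent="invoice-reader",
            identity="sa-invoice-reader",
            tools=frozenset({"billing_db"}),
            allowed_actions=frozenset({("billing_db", "read")}),
        ),
    ]
}


def is_allowed(agent: str, tool: str, action: str) -> bool:
    """Only explicitly listed (tool, action) pairs pass; anything unknown is denied."""
    perms = PERMISSION_SHEET.get(agent)
    if perms is None or tool not in perms.tools:
        return False
    return (tool, action) in perms.allowed_actions
```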

Monitoring and logging. Log every agent action, including tool invocations, API calls, and data access. Capture prompt and response metadata where allowed, but redact regulated data and secrets. Use structured logs with agent identity, timestamp, action type, and result. Send logs to a centralized system like AWS CloudWatch, Google Cloud Logging, Azure Monitor, or an ELK stack. Set up alerts for anomalies such as unexpected API calls, permission denials, or high-volume activity. For a practical blueprint on introducing agents with strong controls and auditability, see our guide on controlled AI agents: a minimal, auditable enterprise pattern.
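
As an illustration of the log shape described above, this sketch emits one JSON record per agent action and redacts before serializing. The sensitive key names and the email pattern are assumptions; a real deployment would plug in the detectors your compliance team approves and a handler that forwards to your central log system.

```python
"""Structured, redacted audit records for agent actions.

Sketch only: the sensitive key names and the email pattern are assumptions.
"""
import json
import logging
import re
from datetime import datetime, timezone

SENSITIVE_KEYS = {"api_key", "authorization", "password", "ssn"}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

logger = logging.getLogger("agent.audit")


def redact(value):
    """Recursively mask sensitive keys and email addresses."""
    if isinstance(value, dict):
        return {k: "[REDACTED]" if k.lower() in SENSITIVE_KEYS else redact(v) for k, v in value.items()}
    if isinstance(value, list):
        return [redact(v) for v in value]
    if isinstance(value, str):
        return EMAIL.sub("[EMAIL]", value)
    return value


def audit_record(agent_id: str, action_type: str, target: str, result: str, details=None) -> str:
    """One JSON line per action: who, when, what, against which target, and the outcome."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action_type": action_type,  # e.g. tool_call, api_call, data_access
            "target": target,
            "result": result,            # e.g. allowed, denied, error
            "details": redact(details or {}),
        },
        sort_keys=True,
    )


def log_action(*args, **kwargs) -> None:
    # Attach whatever handler ships records to CloudWatch, Cloud Logging, Azure Monitor, or ELK.
    logger.info(audit_record(*args, **kwargs))
```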

Lock down egress

Egress control means you define exactly which external destinations an agent can reach and block everything else. This prevents data exfiltration, limits lateral movement, and stops agents from calling unapproved services. It's surprisingly effective.

Deploy an egress gateway. Route all outbound traffic through a gateway that enforces an allowlist of approved domains and IP addresses. Cloud-native options include AWS Network Firewall with domain-list rules, Google Cloud Secure Web Proxy, or Azure Firewall with FQDN rules. A NAT gateway on its own (AWS NAT Gateway, Google Cloud NAT) translates addresses but does not filter by domain, so pair it with one of these filtering layers. For more control, use a forward proxy like Squid, an API gateway like Kong or Apigee, or a service mesh like Istio or Linkerd with egress policies. The gateway should log every outbound request and deny anything not on the allowlist. No exceptions.
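
The core decision an egress gateway makes fits in a few lines. This sketch uses a hypothetical per-agent allowlist keyed on exact host, port, and scheme, and logs every decision. Exact host matching is deliberate: naive suffix or substring checks are a classic way to let `llm.example.com.evil.net` through. The products above implement this for you; the hostnames here are placeholders.

```python
"""Default-deny egress decision with per-agent allowlists.

Sketch only: agent names and hostnames are placeholders.
"""
import json
import logging
from urllib.parse import urlsplit

log = logging.getLogger("egress")

DEFAULT_PORTS = {"https": 443, "http": 80}

# agent -> set of (host, port, scheme) it may reach; everything else is denied.
ALLOWLIST = {
    "invoice-reader": {("llm.example.com", 443, "https")},
}


def check_egress(agent_id: str, url: str) -> bool:
    """Return True only if this agent may call this exact host, port, and scheme."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()  # .hostname drops userinfo and port
    try:
        port = parts.port or DEFAULT_PORTS.get(scheme)
    except ValueError:  # malformed or out-of-range port
        port = None
    allowed = (host, port, scheme) in ALLOWLIST.get(agent_id, set())
    # Log every decision, allowed or denied, for the audit trail.
    log.info(json.dumps({"agent": agent_id, "host": host, "port": port,
                         "scheme": scheme, "decision": "allow" if allowed else "deny"}))
    return allowed
```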

Build and maintain the allowlist. Start by identifying every external service your agents need to call. I mean everything: LLM APIs, SaaS tools, databases, and internal APIs. Document the domain, port, and protocol for each, and add these to your allowlist. Review the list quarterly and remove any destinations that are no longer needed. Require approval from security and platform teams before adding new entries. This process forces teams to justify every external dependency and prevents shadow integrations. It's a bit of work upfront, but it pays dividends.
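
To make the approval and quarterly review enforceable rather than aspirational, you can store each allowlist entry with its owner, justification, and sign-offs, then lint it in CI. A sketch, assuming a hypothetical record format and a 90-day review interval:

```python
"""Lint allowlist entries for missing approvals and overdue reviews.

Sketch only: the record fields and the 90-day interval are assumptions.
"""
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

REVIEW_INTERVAL = timedelta(days=90)  # roughly quarterly


@dataclass(frozen=True)
class AllowlistEntry:
    domain: str
    port: int
    protocol: str
    owner_team: str
    justification: str
    security_approver: Optional[str]
    platform_approver: Optional[str]
    last_reviewed: date


def entry_problems(entry: AllowlistEntry, today: date) -> List[str]:
    """Return every reason this entry should not ship; an empty list means it passes."""
    problems = []
    if not entry.justification.strip():
        problems.append("missing justification")
    if not entry.security_approver:
        problems.append("missing security approval")
    if not entry.platform_approver:
        problems.append("missing platform approval")
    if today - entry.last_reviewed > REVIEW_INTERVAL:
        problems.append("review overdue")
    return problems
```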

Enforce the allowlist at the network layer. Use firewall rules, security groups, or network policies to block all outbound traffic except what's explicitly allowed. On Kubernetes, use NetworkPolicy resources to restrict egress at the pod level. NetworkPolicy matches IP ranges and pod or namespace selectors, not domain names, and only takes effect if your CNI plugin enforces it, so keep domain-based rules in the egress gateway. On cloud platforms, use VPC egress rules or private endpoints to limit connectivity. Test enforcement by attempting to reach an unapproved destination and confirming the request is blocked. Actually test it. Don't just assume it works.
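
For the Kubernetes route, here's a sketch that generates a per-agent `NetworkPolicy`: it selects the agent's pods, allows DNS to `kube-system`, and allows TCP 443 only to the listed CIDR blocks. The `agent` label, the namespace naming, and the CIDRs are assumptions. One subtlety the code guards against: an egress rule whose `to` list is empty matches every destination, so the function omits that rule entirely when no CIDRs are given.

```python
"""Generate a per-agent Kubernetes NetworkPolicy that default-denies egress.

Sketch only: the label, namespace naming, and CIDRs are assumptions. Requires
a CNI plugin that enforces NetworkPolicy.
"""
import json
from typing import List


def egress_policy(agent: str, allowed_cidrs: List[str], port: int = 443) -> dict:
    rules = [
        # Allow DNS lookups to the cluster DNS service in kube-system.
        {
            "to": [{"namespaceSelector": {"matchLabels": {"kubernetes.io/metadata.name": "kube-system"}}}],
            "ports": [{"protocol": "UDP", "port": 53}, {"protocol": "TCP", "port": 53}],
        }
    ]
    if allowed_cidrs:
        # An empty "to" list would match ALL destinations, so add this rule only when non-empty.
        rules.append({
            "to": [{"ipBlock": {"cidr": cidr}} for cidr in allowed_cidrs],
            "ports": [{"protocol": "TCP", "port": port}],
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{agent}-egress", "namespace": f"agent-{agent}"},
        "spec": {
            "podSelector": {"matchLabels": {"agent": agent}},
            # Declaring Egress isolates the pods: egress not matched by a rule is denied.
            "policyTypes": ["Egress"],
            "egress": rules,
        },
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation range; substitute your approved endpoints.
    print(json.dumps(egress_policy("invoice-reader", ["203.0.113.10/32"]), indent=2))
```

Because NetworkPolicy only understands IPs, this complements the domain-level gateway rather than replacing it. To actually test it, run a throwaway pod with the same labels and confirm that a request to an unlisted address fails.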

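Block sensitive data classes from leaving. Use data loss prevention tools or egress inspection to detect and block sensitive data in outbound requests. Cloud providers offer data classification services such as Amazon Macie (for data in S3), Google Cloud Sensitive Data Protection (formerly Cloud DLP), and Microsoft Purview Information Protection (formerly Azure Information Protection). These services focus on finding and labeling sensitive data; to block it in flight, apply DLP rules at an inspecting proxy or gateway that examines request bodies. Configure rules to flag or block API calls that contain PII, credentials, or proprietary data. This adds a second layer of defense if an agent is tricked into exfiltrating data. To learn how to systematically assess and mitigate these risks, explore our resource on how to test, validate, and monitor AI systems.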
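
A deliberately simple sketch of the inspection step an egress proxy might run on request bodies: regex detectors for AWS access key IDs, email addresses, and PEM private keys, plus a Luhn checksum to cut false positives on card-number-like strings. These patterns are illustrative assumptions and no substitute for a managed DLP engine; the point is where the check sits in the request path.

```python
"""Minimal outbound-body scanner an egress proxy could call before forwarding.

Sketch only: these detectors are illustrative and will miss plenty; use a
managed DLP engine for production rules.
"""
import re
from typing import List

DETECTORS = {
    # Long-term (AKIA) and temporary (ASIA) AWS access key IDs.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}
# 13 to 19 digits, optionally separated by spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_outbound(body: str) -> List[str]:
    """Return the names of sensitive-data classes found in an outbound body."""
    findings = [name for name, pattern in DETECTORS.items() if pattern.search(body)]
    for match in CARD_CANDIDATE.finditer(body):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings.append("payment_card")
            break
    return findings


def should_block(body: str) -> bool:
    """Fail the request if any detector fires."""
    return bool(scan_outbound(body))
```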

Harden identity paths and prove compliance

Identity hardening means you control how agents authenticate, how long their credentials last, and how you prove they followed policy. This is where the rubber meets the road for compliance.

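Federated identity and SSO. Integrate agent identity with your organization's identity provider using OIDC or OAuth 2.0 (or SAML for human sign-in). This lets you enforce conditional access and centralized revocation, and tie each agent's actions back to the human who delegated the task, whose sign-in is protected by MFA. If an employee leaves or a credential is compromised, you can revoke access centrally across all agents; tokens that were already issued stop working when they expire, which is another reason to keep them short-lived. Cloud workload identity services like AWS IAM roles (and IAM Roles Anywhere for workloads outside AWS), Google Cloud Workload Identity Federation, or Azure managed identities make this straightforward. Well, relatively straightforward.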
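
Once a token's signature has been verified with a maintained JWT library (not shown here), the service receiving it still has to check its claims. A sketch of that step, assuming a hypothetical issuer URL and audience, plus a central revocation set that lets you cut off an agent identity without waiting for its tokens to expire:

```python
"""Claim checks for an already signature-verified OIDC/JWT access token.

Sketch only: the issuer, audience, and revocation set are hypothetical, and
signature verification must happen first with a maintained JWT library.
"""
import time
from typing import Optional

TRUSTED_ISSUER = "https://idp.example.com"
EXPECTED_AUDIENCE = "agent-gateway"
REVOKED_SUBJECTS = set()  # assumed to be fed by your IdP's deprovisioning events


def validate_claims(claims: dict, now: Optional[float] = None, leeway: int = 60) -> str:
    """Return the token subject if the claims are acceptable, else raise PermissionError."""
    now = time.time() if now is None else now
    if claims.get("iss") != TRUSTED_ISSUER:
        raise PermissionError("untrusted issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]  # "aud" may be a string or a list
    if EXPECTED_AUDIENCE not in audiences:
        raise PermissionError("token not issued for this service")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or now > exp + leeway:  # leeway absorbs clock skew
        raise PermissionError("token expired or missing exp")
    sub = claims.get("sub")
    if not sub or sub in REVOKED_SUBJECTS:
        raise PermissionError("subject missing or revoked")
    return sub
```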

Short-lived tokens. Issue tokens that expire in minutes or hours, not days or months. Use token refresh flows to extend sessions only when needed. This limits the window of exposure if a token is leaked. Most cloud IAM systems support automatic token rotation. Configure your agents to request new tokens before expiration and fail closed if refresh fails. The shorter the better, honestly.
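
The refresh-before-expiry and fail-closed behavior fits in a small wrapper. In this sketch, `fetch` stands in for whatever call returns a fresh short-lived token from your identity provider (a hypothetical callable), and the two-minute refresh margin is an assumption to tune:

```python
"""Short-lived token cache: refresh before expiry, fail closed on refresh errors.

Sketch only: `fetch` stands in for your identity provider's token call.
"""
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Token:
    value: str
    expires_at: float  # epoch seconds


class TokenCache:
    def __init__(self, fetch: Callable[[], Token], refresh_margin: float = 120.0,
                 clock: Callable[[], float] = time.time):
        self._fetch = fetch
        self._margin = refresh_margin
        self._clock = clock
        self._token: Optional[Token] = None

    def get(self) -> str:
        """Return a valid token value, refreshing early; raise rather than use a stale token."""
        now = self._clock()
        if self._token is None or now >= self._token.expires_at - self._margin:
            try:
                self._token = self._fetch()
            except Exception as exc:
                self._token = None  # fail closed: drop the old token instead of reusing it
                raise RuntimeError("token refresh failed; refusing to act") from exc
            if self._token.expires_at <= now:
                self._token = None
                raise RuntimeError("identity provider returned an already-expired token")
        return self._token.value
```

Injecting the clock keeps the refresh logic testable without sleeping, and the caller gets an exception it must handle instead of silently carrying on with an expired credential.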

Audit logs and compliance evidence. Collect logs that prove who did what, when, and with what authority. Include agent identity, action type, resource accessed, and result. Store them in tamper-evident, write-once storage. AWS CloudTrail, Google Cloud Audit Logs, and the Azure Activity Log capture cloud API activity; pair them with your agent-level logs and protect both, for example with CloudTrail log file integrity validation and S3 Object Lock. Retain logs according to your compliance requirements, typically 90 days to 7 years depending on industry. Map your controls to common frameworks and regulations like SOC 2, ISO 27001, NIST 800-53, or GDPR to simplify audits and risk assessments. Your auditors will love you for this.
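
Managed services provide immutability for you, but the idea behind tamper evidence is easy to show. In this sketch, each log entry stores the SHA-256 of the previous entry's hash plus its own canonical JSON, so editing any past record breaks verification from that point on. It's an illustration, not a replacement for write-once storage: someone who can rewrite the whole chain can recompute every hash, which is why you'd also anchor the latest hash somewhere they can't touch.

```python
"""Hash-chained, append-only audit log (tamper-evident, not tamper-proof).

Sketch only: real deployments also need write-once storage and an externally
anchored copy of the latest hash.
"""
import hashlib
import json
from typing import List

GENESIS = "0" * 64


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(log: List[dict], event: dict) -> dict:
    """Add an event, linking it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev, "hash": _digest(prev, event)}
    log.append(entry)
    return entry


def verify(log: List[dict]) -> bool:
    """Recompute the chain; an edited, inserted, or removed entry breaks it
    (truncating entries from the end does not, hence the external anchor)."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```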

Business case and ROI metrics. Zero-trust controls reduce incident response time because you can isolate the affected agent without taking down the whole platform. They lower compliance risk because you can prove access paths and data handling with logs. They also control costs because egress gateways and quotas prevent unbounded API usage when something goes wrong. And something always goes wrong eventually. Track mean time to detect, mean time to respond, spend variance per agent, and audit readiness score. Establish a baseline before implementing controls, estimate expected reduction in incidents and costs, and report quarterly trends with incident narratives. For frameworks and real-world examples on quantifying the value of AI security investments, see our article on measuring the ROI of AI in business.
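
The metrics above are simple arithmetic once incidents and spend are recorded consistently. Here's a sketch with hypothetical record fields. Note that it measures MTTR from detection to resolution, which is one of several definitions in use, so pick one and keep it fixed across quarters or your trend lines won't mean anything.

```python
"""Quarterly security ROI metrics from incident and spend records.

Sketch only: the record fields are hypothetical. MTTR here runs from detection
to resolution; other teams measure from incident start.
"""
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class Incident:
    started: datetime   # when the compromise or failure began (often set after investigation)
    detected: datetime  # first alert or report
    resolved: datetime  # containment and recovery complete


def mttd_minutes(incidents: List[Incident]) -> float:
    """Mean time to detect, in minutes."""
    return mean((i.detected - i.started).total_seconds() / 60 for i in incidents)


def mttr_minutes(incidents: List[Incident]) -> float:
    """Mean time to respond (detection to resolution), in minutes."""
    return mean((i.resolved - i.detected).total_seconds() / 60 for i in incidents)


def spend_variance(actual: Dict[str, float], budget: Dict[str, float]) -> Dict[str, float]:
    """Fractional over/under spend per budgeted agent; 0.2 means 20% over budget."""
    return {agent: (actual.get(agent, 0.0) - b) / b for agent, b in budget.items() if b > 0}
```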

What to do now

Start with a pilot. Choose one high-risk agent, isolate it, lock down its egress, and measure the impact. Use the results to build the business case for broader rollout. Start small, prove value, then scale.

Assign ownership. Security sets policy. Platform engineering enforces the paved road. Application teams declare intent and maintain allowlists. Audit consumes evidence. Make these roles explicit in your operating model. Everyone needs to know their part.

Make zero trust a release gate. Block production deployments if an agent uses shared credentials, has no egress allowlist, or lacks audit logging. Automate the checks in your CI/CD pipeline. No exceptions, no matter how urgent the release seems.
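
A release gate can start as a short script in the pipeline. This sketch assumes a hypothetical JSON manifest listing each agent's `name`, `credential_id`, `egress_allowlist`, and `audit_logging` flag, and returns a non-zero exit code on any violation so CI blocks the deploy.

```python
"""CI release gate: block deploys that violate zero-trust minimums.

Sketch only: assumes a hypothetical manifest, a JSON list of objects with
"name", "credential_id", "egress_allowlist", and "audit_logging".
"""
import json
import sys
from typing import List


def gate_violations(agents: List[dict]) -> List[str]:
    """Return human-readable violations; an empty list means the gate passes."""
    violations = []
    first_user = {}  # credential_id -> first agent seen using it
    for agent in agents:
        name = agent.get("name", "<unnamed>")
        cred = agent.get("credential_id")
        if not cred:
            violations.append(f"{name}: no credential_id declared")
        elif cred in first_user:
            violations.append(f"{name}: shares credential {cred} with {first_user[cred]}")
        else:
            first_user[cred] = name
        if not agent.get("egress_allowlist"):
            violations.append(f"{name}: no egress allowlist")
        if agent.get("audit_logging") is not True:
            violations.append(f"{name}: audit logging not enabled")
    return violations


def main(path: str) -> int:
    with open(path, encoding="utf-8") as f:
        agents = json.load(f)
    problems = gate_violations(agents)
    for problem in problems:
        print(f"BLOCKED: {problem}", file=sys.stderr)
    return 1 if problems else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage in CI: python release_gate.py agents.json
    sys.exit(main(sys.argv[1]))
```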

Review and iterate. Audit your allowlists quarterly. Rotate credentials automatically. Run tabletop exercises to test your incident response. Zero trust isn't a one-time project. It's a continuous practice that evolves with your AI systems. The threat landscape changes, your systems change, so your security posture needs to change too.